Imagine a world in which artificial intelligence can detect fraud with the highest precision: transparently, ethically and reliably. The collaboration between Nexi and CROZ shows how important an AI governance framework, including MLOps practices, is for realizing advanced machine learning models for fraud detection.

Nexi, a leading provider of online payment solutions, faced many challenges while developing its fraud detection system. These included data quality, security, constantly evolving fraud methods and model bias. Effective MLOps, human expertise and regulatory compliance are essential to ensure that the models perform well and remain reliable.

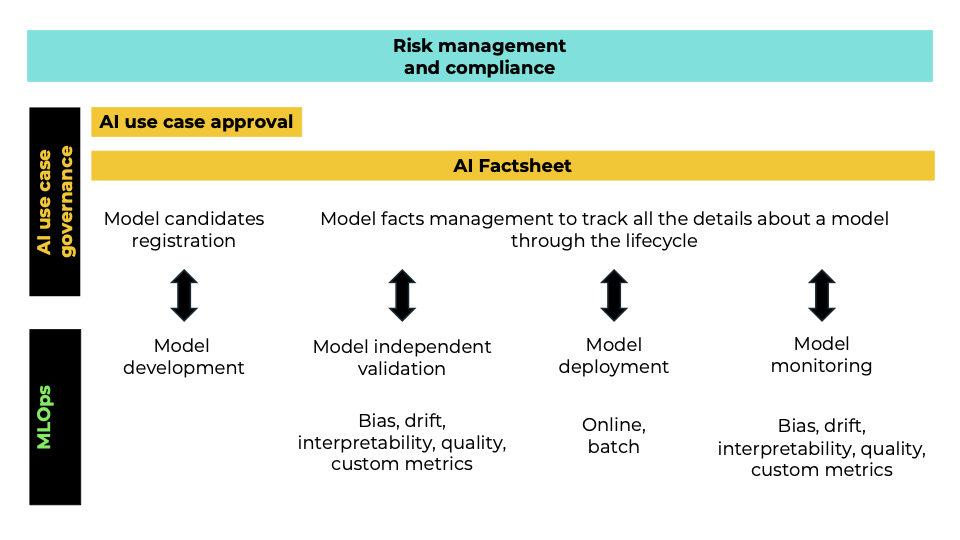

MLOps practices have become indispensable, but they need to be complemented by AI governance. AI governance is the foundation for trustworthy AI-powered systems and safeguards regulatory compliance. It complements the MLOps lifecycle by embedding technical monitoring in a regulatory framework.

AI governance covers the definition of processes, standards and frameworks that ensure AI systems are developed and deployed responsibly, ethically and in compliance with regulations. This includes ethical guidelines that promote fairness, transparency and accountability and ensure that the use of AI respects human rights and societal values. Regulatory compliance is essential here: it helps organizations meet legal requirements such as the EU AI Act, which sets specific requirements for high-risk AI applications. Effective risk management is indispensable for identifying and mitigating potential risks such as bias, data protection issues and unintended consequences. AI governance requires collaboration among a wide range of stakeholders, including developers, users, regulators and ethics experts. Together they create a holistic approach that aligns with societal expectations and strengthens public trust in AI-powered systems.

Designing and implementing AI governance with IBM watsonx.governance

At CROZ, we follow an iterative approach in which MLOps and the design of AI governance are treated as a continuously evolving cycle. Our processes are refined on an ongoing basis using practical experience and community feedback. The methodology starts with defining an AI use case and identifying all roles involved, with a focus on risk assessment and on involving the relevant stakeholders. Governance always comes first: we make sure that AI applications are used in an ethically responsible way and are seamlessly embedded in existing governance and risk management structures. Only once these structures are in place do we implement the MLOps processes, with a focus on robust model development and deployment.

We maintain a continuous feedback loop between MLOps and AI governance, because both models and regulatory requirements keep evolving. This iterative approach ensures that our projects meet current standards and can adapt flexibly to future changes. By combining technical excellence with a strong governance mindset, we make sure that our AI initiatives are both innovative and compliant, and therefore ready for a dynamic regulatory environment.

Let's look at a practical fraud detection example. It uses publicly available data from Kaggle and serves demonstration purposes only.

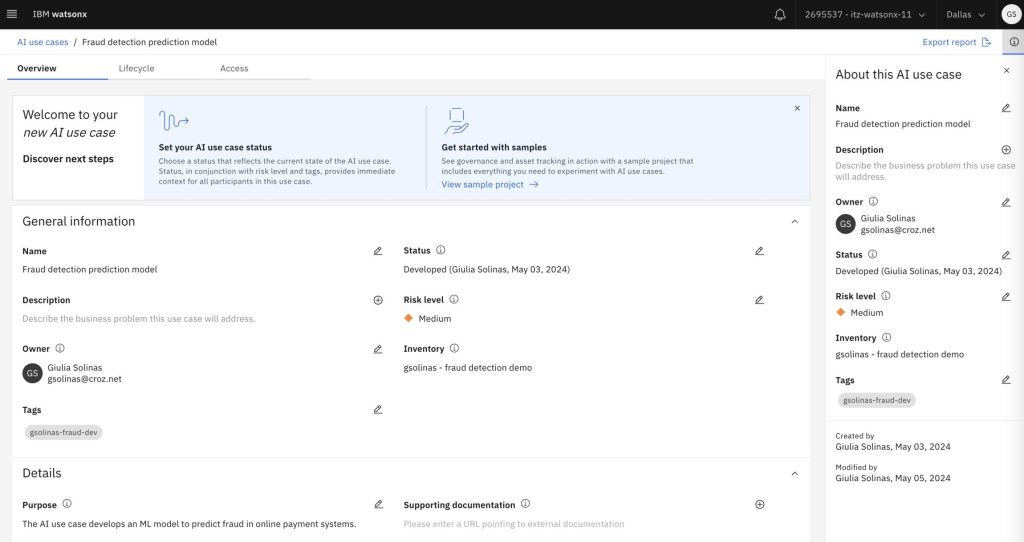

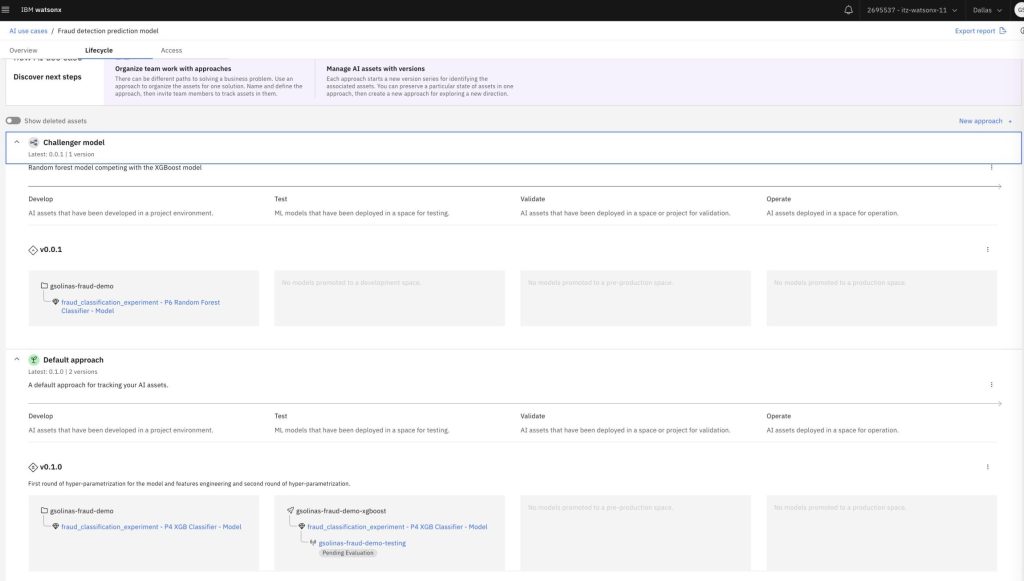

The first step is to discuss the fraud detection AI use case together with the relevant stakeholders. For this, an AI use case is created in IBM watsonx.governance. It documents the associated risk, a description of the model in its business context, and the model owner. The model owner can hold a business or a technical role. The central task is to align business information with regulatory requirements and with the technical model metrics.
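To make the contents of such a use case record concrete, here is a minimal Python sketch of the information it ties together. It is not the IBM watsonx.governance API: the `AIUseCase` class, its fields, the three-level internal `RiskLevel` rating and the `requires_enhanced_review` rule are hypothetical and only illustrate how risk, business description and model owner belong in one record.

```python
from dataclasses import dataclass, field
from enum import Enum


class RiskLevel(Enum):
    # Hypothetical internal risk rating, not a legal classification.
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


@dataclass
class AIUseCase:
    """Hypothetical AI use case record (illustration only, not a vendor API)."""
    name: str
    business_description: str
    risk_level: RiskLevel
    model_owner: str
    owner_role: str  # business or technical role
    regulations: list[str] = field(default_factory=list)

    def requires_enhanced_review(self) -> bool:
        # Illustrative rule: use cases rated HIGH get an extra review step.
        return self.risk_level is RiskLevel.HIGH


use_case = AIUseCase(
    name="Fraud detection for online payments",
    business_description="Flags potentially fraudulent transactions for manual review.",
    risk_level=RiskLevel.HIGH,
    model_owner="owner@example.com",  # placeholder contact
    owner_role="Fraud analytics lead",
    regulations=["GDPR", "DORA", "EU AI Act"],
)

print(use_case.requires_enhanced_review())  # True
```

Keeping the owner, the risk rating and the applicable regulations in one structure is the point: later technical metrics can be linked back to a single, accountable record.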

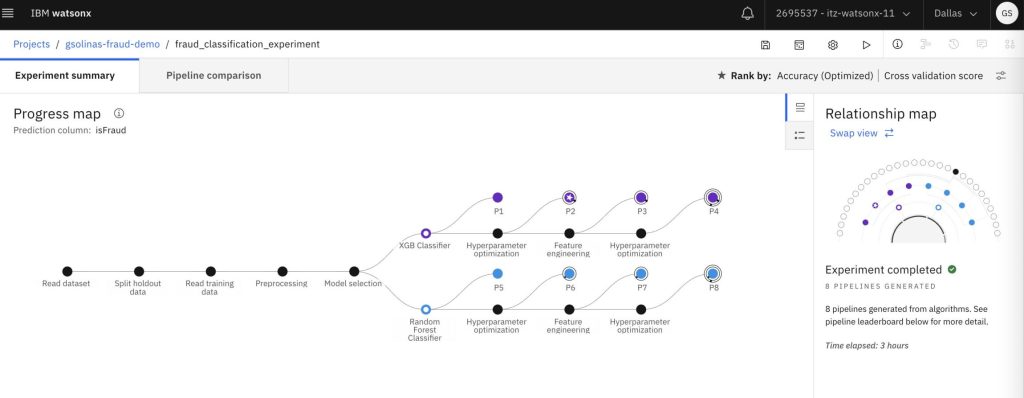

The next two steps form the core of common MLOps practices and data science projects. They include modular pipelines for sample splits, feature selection and experiments with different models for detecting fraudulent transactions.
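As a rough illustration of "modular pipelines", the sketch below composes small, reusable steps in plain Python. It assumes a stratified split, which is a common choice for fraud data because fraudulent transactions are usually a tiny minority. The function names, the column names (`amount`, `hour`, `is_fraud`) and the synthetic data are hypothetical; a real project would more likely use a library such as scikit-learn.

```python
import random
from collections import defaultdict


def stratified_split(rows, label_key, test_ratio=0.2, seed=42):
    """Split rows into train/test sets while keeping each class's share stable."""
    rng = random.Random(seed)  # fixed seed makes the split reproducible
    by_label = defaultdict(list)
    for row in rows:
        by_label[row[label_key]].append(row)

    train, test = [], []
    for group in by_label.values():
        rng.shuffle(group)  # shuffles the local per-class list in place
        n_test = round(len(group) * test_ratio)
        test.extend(group[:n_test])
        train.extend(group[n_test:])
    return train, test


def make_feature_selector(features, label_key):
    """Return a pipeline step that keeps only the chosen features and the label."""
    def select(rows):
        return [{key: row[key] for key in (*features, label_key)} for row in rows]
    return select


def run_pipeline(rows, steps):
    """Apply independent, reusable steps one after another."""
    for step in steps:
        rows = step(rows)
    return rows


# Synthetic, heavily imbalanced data: 20 of 1,000 transactions are fraud.
rows = [
    {"amount": i * 1.5, "hour": i % 24, "country": "XX", "is_fraud": int(i < 20)}
    for i in range(1000)
]

train, test = stratified_split(rows, "is_fraud", test_ratio=0.2)
select_step = make_feature_selector(["amount", "hour"], "is_fraud")
train = run_pipeline(train, [select_step])
test = run_pipeline(test, [select_step])

print(len(train), len(test))             # 800 200
print(sum(r["is_fraud"] for r in test))  # 4
```

Because each step is a plain function, individual steps (for example a different feature selection) can be swapped per experiment without touching the rest of the pipeline.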

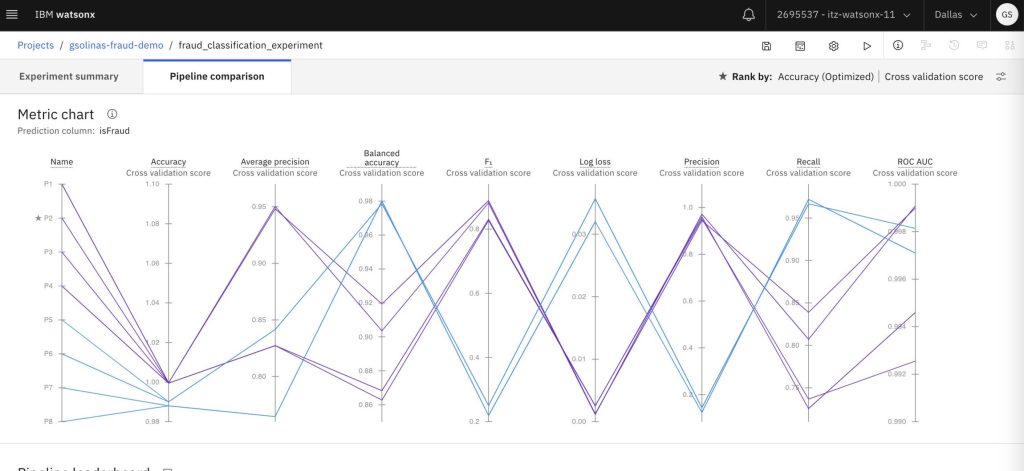

A data scientist or ML engineer then evaluates the performance and regulatory compliance of the different experiments before the project moves on to the next phase: model testing and validation.
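One way to compare experiments before promotion is sketched below. It assumes that precision, recall and F1 are the relevant performance metrics and that a candidate must reach a minimum recall (`min_recall`, a hypothetical gate) before it is considered. The experiment names and predictions are made up for illustration, and the compliance part of the assessment is not modeled here.

```python
def precision_recall_f1(y_true, y_pred):
    """Binary classification metrics with 1 = fraud."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


def select_candidate(y_true, experiments, min_recall=0.7):
    """Score every experiment; among those meeting min_recall, pick the best F1."""
    scored = {name: precision_recall_f1(y_true, preds) for name, preds in experiments.items()}
    eligible = {name: m for name, m in scored.items() if m["recall"] >= min_recall}
    if not eligible:
        return None, scored
    best = max(eligible, key=lambda name: eligible[name]["f1"])
    return best, scored


# Hypothetical labels and predictions from two experiments.
y_true = [0, 0, 0, 0, 0, 0, 1, 1, 1, 1]
experiments = {
    "logistic_regression": [0, 0, 0, 0, 0, 1, 1, 1, 0, 0],
    "gradient_boosting": [0, 0, 0, 0, 0, 0, 1, 1, 1, 0],
}

best, scored = select_candidate(y_true, experiments)
print(best)  # gradient_boosting
```

Recall is used as the gate because a missed fraud case is usually costlier than a false alarm; the right threshold is a business and governance decision, not a technical constant.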

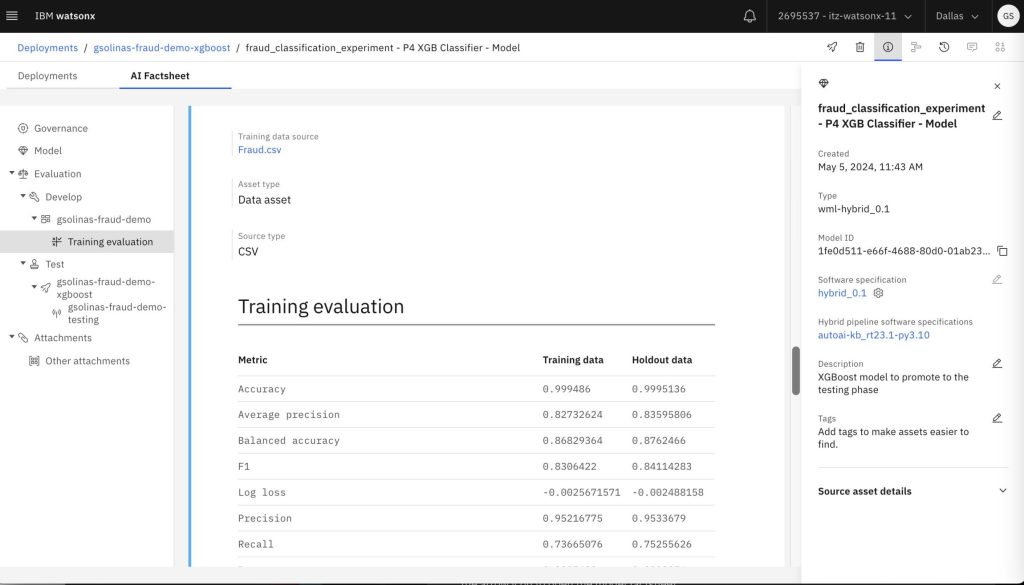

This is where the AI governance framework comes back in, because all relevant technical information about each phase of the model lifecycle (development, test, deployment) must be documented in the so-called AI Factsheet. This document complements the AI use case, which is broader in scope and contains details on risks and regulatory requirements (for example DORA, the GDPR or the upcoming EU AI Act).
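The idea of documenting every lifecycle phase can be sketched as a simple data structure. This is a hypothetical model, not the IBM AI Factsheets API: `AIFactsheet`, `FactsheetEntry`, `record` and `missing_phases` are illustrative names, and the metric values in the usage example are placeholders.

```python
from dataclasses import dataclass, field
from datetime import date

# Lifecycle phases named in the text.
PHASES = ("development", "test", "deployment")


@dataclass
class FactsheetEntry:
    phase: str
    recorded_on: date
    metrics: dict[str, float]
    notes: str = ""


@dataclass
class AIFactsheet:
    """Hypothetical factsheet: technical facts per lifecycle phase, linked to a use case."""
    model_name: str
    use_case: str  # reference back to the AI use case
    entries: list[FactsheetEntry] = field(default_factory=list)

    def record(self, phase, metrics, notes="", recorded_on=None):
        if phase not in PHASES:
            raise ValueError(f"Unknown lifecycle phase: {phase!r}")
        entry = FactsheetEntry(phase, recorded_on or date.today(), dict(metrics), notes)
        self.entries.append(entry)
        return entry

    def missing_phases(self):
        """Phases that have no documented entry yet."""
        documented = {e.phase for e in self.entries}
        return [p for p in PHASES if p not in documented]


fs = AIFactsheet("fraud-clf-v1", "Fraud detection for online payments")
fs.record("development", {"f1": 0.86}, notes="placeholder values")
fs.record("test", {"f1": 0.84}, notes="placeholder values")
print(fs.missing_phases())  # ['deployment']
```

A check such as `missing_phases` makes documentation gaps visible before a model moves to the next phase.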

Embedding technical model metrics in the AI Factsheet and linking them to the contextual information from the AI use case has several advantages. It improves the explainability of machine learning models, because the metrics are documented transparently and consistently. Especially when datasets are new or have changed, this approach helps preserve “AI fairness”, reduces bias effects (“AI bias”) and improves traceability.
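The text does not say which fairness metric is used. As one common example, the sketch below computes the disparate impact ratio: the rate of favorable outcomes for a monitored group divided by that of a reference group. It assumes that "favorable" means a transaction was not flagged as fraud; the groups "A" and "B" and the predictions are hypothetical. The 0.8 threshold is the frequently cited four-fifths rule of thumb, not a regulatory requirement stated in the source.

```python
def favorable_rate(predictions, groups, group):
    """Share of a group's transactions that were NOT flagged as fraud (prediction 0)."""
    outcomes = [p for p, g in zip(predictions, groups) if g == group]
    if not outcomes:
        raise ValueError(f"No records for group {group!r}")
    return sum(1 for p in outcomes if p == 0) / len(outcomes)


def disparate_impact(predictions, groups, monitored, reference):
    """Ratio of favorable-outcome rates: monitored group vs. reference group."""
    return favorable_rate(predictions, groups, monitored) / favorable_rate(
        predictions, groups, reference
    )


# Hypothetical predictions (1 = flagged as fraud) for two customer groups.
predictions = [1] + [0] * 9 + [1, 1, 1] + [0] * 7
groups = ["A"] * 10 + ["B"] * 10

di = disparate_impact(predictions, groups, monitored="B", reference="A")
print(round(di, 3))  # 0.778
print("review needed" if di < 0.8 else "ok")  # review needed
```

Recording this value per dataset version in the factsheet is what makes a fairness regression visible when the data changes.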

This approach also improves risk management. A framework with a continuous feedback loop between MLOps and AI governance increases transparency and enables ongoing evaluation. Risks such as model drift and other potential issues can be reduced effectively. This continuous evaluation helps keep models reliable and compliant with regulatory standards.
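Model drift is often quantified by comparing the distribution of a feature or score in production with a baseline. The sketch below uses the Population Stability Index (PSI), one widely used drift measure; the source does not name a specific metric. The bin edges, the sample data and the 0.1 and 0.25 bands are assumptions: the bands are a common industry rule of thumb and should be calibrated per model.

```python
import math
from bisect import bisect_right


def bin_shares(values, edges, eps=1e-6):
    """Share of values per bin; out-of-range values fall into the outermost bins."""
    n_bins = len(edges) - 1
    counts = [0] * n_bins
    for v in values:
        idx = min(max(bisect_right(edges, v) - 1, 0), n_bins - 1)
        counts[idx] += 1
    # A small floor avoids log(0) for empty bins.
    return [max(c / len(values), eps) for c in counts]


def psi(baseline, current, edges):
    """Population Stability Index between a baseline and a current sample."""
    b, c = bin_shares(baseline, edges), bin_shares(current, edges)
    return sum((ci - bi) * math.log(ci / bi) for bi, ci in zip(b, c))


def drift_status(value):
    # Rule-of-thumb bands (assumption); calibrate per model.
    if value < 0.1:
        return "stable"
    if value < 0.25:
        return "moderate drift"
    return "significant drift"


edges = [0, 10, 20, 30, 40]  # hypothetical bins for transaction amounts
baseline = [5] * 25 + [15] * 25 + [25] * 25 + [35] * 25
current = [5] * 10 + [15] * 20 + [25] * 30 + [35] * 40

value = psi(baseline, current, edges)
print(round(value, 3), drift_status(value))  # 0.228 moderate drift
```

Run on a schedule and written back into the factsheet, such a check turns "continuous evaluation" into a concrete, auditable signal.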

Using AI governance for audits

From a practical point of view, AI governance is indispensable for enabling auditors to assess the underlying architecture of an AI system on a sound basis. AI audits are crucial for ensuring compliance with legal requirements and for effectively managing the risks associated with AI applications. Such audits include a comprehensive assessment of regulatory compliance, for example with the GDPR or the EU AI Act, as well as an analysis of potential biases and security vulnerabilities. Continuous monitoring and regular audits help maintain system performance and make it possible to respond to emerging challenges. Organizations that define clear responsibilities and establish robust AI governance structures can minimize risks, uphold ethical standards and deliberately strengthen the trust of users and stakeholders.

Core principles of AI governance

As the regulatory framework for artificial intelligence evolves rapidly and the pressure to establish internal governance structures grows, many companies are starting to develop corresponding processes and frameworks. This can seem complex at first, which is why a step-by-step approach has proven effective in practice. A possible implementation roadmap could look like this:

- Define governance roles: Clearly assign responsibilities for overseeing AI systems and involve different stakeholders.

- Plan regular audits: Set audit intervals and involve external auditors.

- Conduct impact assessments: Use data protection impact assessments (DPIAs) to identify and document risks.

- Ensure transparency: Provide comprehensible documentation of how the AI systems in use work and how they reach decisions.

- Ensure human oversight: Train operating staff accordingly and establish clear intervention processes.

- Develop accountability metrics: Define performance indicators for AI systems and monitor them continuously.

- Engage stakeholders: Actively involve the relevant stakeholder groups in the design process.

- Publish reports: Regularly publish transparency reports on the performance and governance of the AI systems in use.
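The "develop accountability metrics" step can be made operational by comparing reported metrics against agreed limits. The sketch below is a hypothetical example: the `Threshold` class, the metric names and all limits and values are illustrative assumptions, not recommendations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threshold:
    metric: str
    limit: float
    higher_is_better: bool = True


def check_metrics(current, thresholds):
    """Return human-readable alerts for every metric that violates its limit or is missing."""
    alerts = []
    for t in thresholds:
        value = current.get(t.metric)
        if value is None:
            alerts.append(f"{t.metric}: no value reported")
            continue
        ok = value >= t.limit if t.higher_is_better else value <= t.limit
        if not ok:
            alerts.append(f"{t.metric}: {value:.3f} violates limit {t.limit:.3f}")
    return alerts


# Hypothetical limits agreed between model owner and governance team.
thresholds = [
    Threshold("recall", 0.75),
    Threshold("disparate_impact", 0.8),
    Threshold("psi", 0.25, higher_is_better=False),
]
current = {"recall": 0.81, "disparate_impact": 0.78, "psi": 0.12}

for alert in check_metrics(current, thresholds):
    print(alert)  # disparate_impact: 0.780 violates limit 0.800
```

Treating a missing value as an alert is a deliberate choice: for accountability, an unreported metric should be as visible as a violated one.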

A corporate culture built on responsibility, transparency and continuous improvement lays the foundation for trustworthy, future-proof AI solutions.

If you have any questions, we are just one click away.