Events, event streaming, real time, event processing… OK, now that we have your attention, let's explore some common use cases for streaming applications.

Stream processing is used when an event happens (e.g. a card payment) and you need to react to it as soon as possible, in near real time, by producing some output: for example, predicting whether the transaction is fraudulent, or sending the customer a push notification with their balance.
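
To make the idea concrete, here is a minimal Python sketch of event-at-a-time processing for the card payment example. It does not use Kafka, and everything in it is hypothetical and chosen only for illustration: the `CardPayment` event shape, the `process` function, and the fixed 1,000 threshold. The simple amount threshold stands in for a real fraud-detection model.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Iterator


@dataclass
class CardPayment:
    # Hypothetical event shape, for illustration only
    account_id: str
    amount: float


# Hypothetical rule standing in for a real fraud model
FRAUD_THRESHOLD = 1000.0


def process(events: Iterable[CardPayment], balances: Dict[str, float]) -> Iterator[str]:
    """React to each payment as soon as it arrives instead of waiting for a batch."""
    for event in events:
        if event.amount > FRAUD_THRESHOLD:
            # Suspicious payment: raise an alert and do not apply it to the balance
            yield f"ALERT {event.account_id}: possible fraud ({event.amount:.2f})"
            continue
        # Normal payment: update the balance and notify the customer right away
        balances[event.account_id] = balances.get(event.account_id, 0.0) - event.amount
        yield f"PUSH {event.account_id}: new balance {balances[event.account_id]:.2f}"


if __name__ == "__main__":
    balances = {"acc-1": 500.0}
    events = [CardPayment("acc-1", 20.0), CardPayment("acc-1", 5000.0)]
    for message in process(events, balances):
        print(message)
    # PUSH acc-1: new balance 480.00
    # ALERT acc-1: possible fraud (5000.00)
```

Each payment produces an output the moment it is processed, rather than after a nightly batch run. In a Kafka-based setup, the events would typically be read from a topic and the results written to another topic.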

Banking, telco, automotive and transport are just some of the industries with many stream processing use cases:

- Integrating internal and external, legacy and modern systems by using Kafka as an integration layer through which communication between systems flows

- Real-time monitoring of devices or applications unified through a single streaming platform

- User behavior analysis enables you to offer your customers the best possible service and support

- Proactive monitoring helps with the early detection of problematic patterns that might eventually lead to errors in the system. Imagine how many support center calls this might prevent

- Connected and autonomous cars wouldn't be possible without streaming solutions in which data from thousands of sensors is analyzed in real time and important decisions are made on the spot

- Personalized recommendations show your customers that you care, through direct, personal communication and custom-tailored offers

- Real-time supply and inventory analysis can help your organization optimize its supply chain management

- Real-time KPI reports: why wait 24 hours for your ETL jobs to finish when you can track some of your most important KPIs in real time?

In all of these use cases, Kafka can play an important role, and it is very often our go-to choice as a complete stream processing platform.

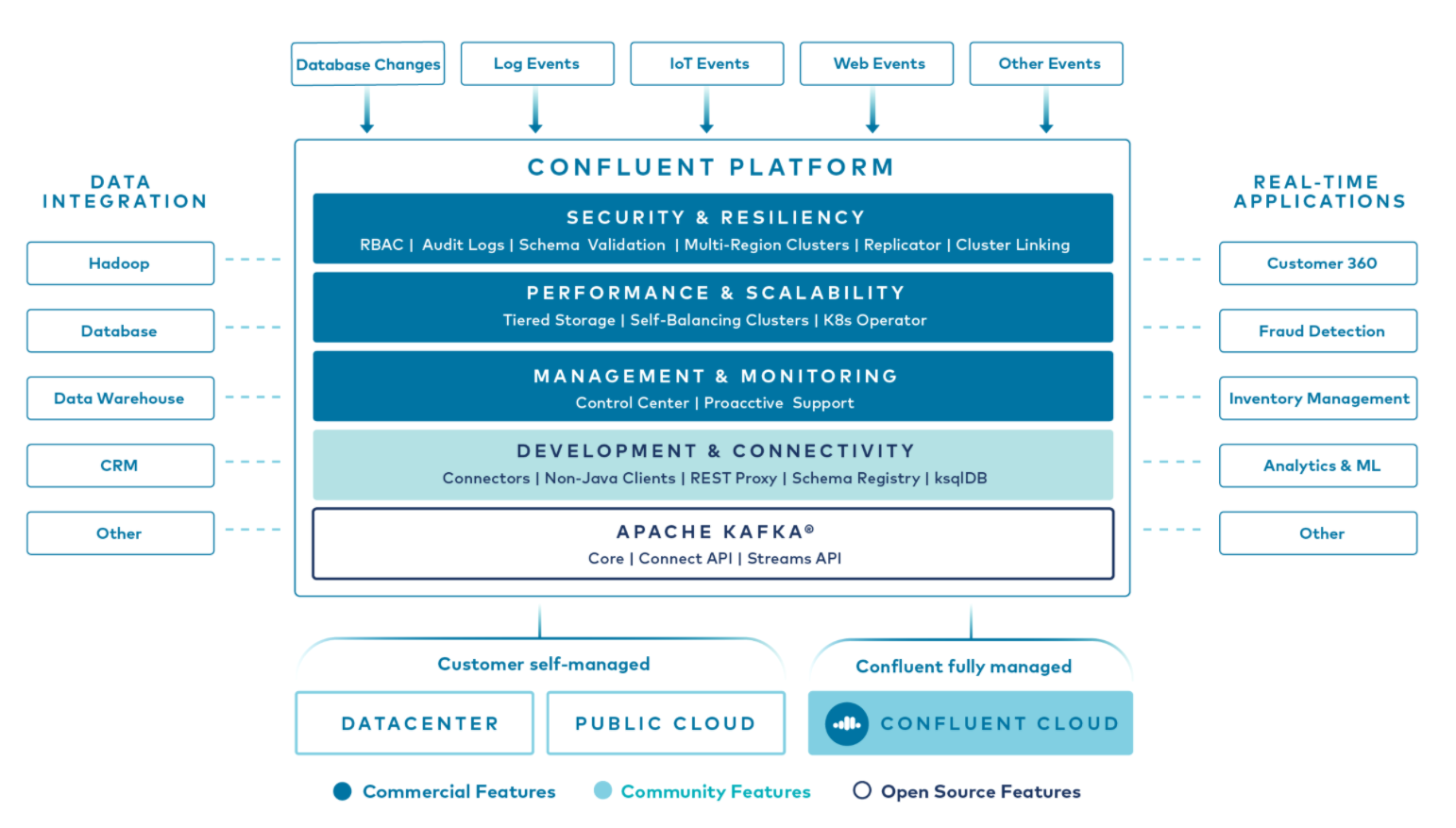

Apache Kafka is open source and is mostly developed by Confluent. Besides contributing to open-source Apache Kafka, Confluent also develops its own streaming platform built on top of Kafka, called Confluent Platform.

Confluent Platform adds numerous features on top of Kafka, built for enterprises that require proper security, multi-region clusters, monitoring, administration and integration with their on-prem and cloud systems.

We recognized the value of Confluent Platform years ago and have since worked towards becoming a reliable Confluent partner by learning every part of the platform, educating and certifying our engineers, and implementing systems built on it. The most recent milestone on our Confluent and Kafka journey was becoming a Plus-level Confluent partner, which we achieved this month.

With this partnership, we are looking forward to new challenges and opportunities in helping your organization overcome every obstacle on its real-time stream processing journey.

For almost 10 years now, CROZ has been focusing on developing streaming applications, helping customers benefit from reacting to events as soon as they happen. Throughout these 10 years, we've been involved in various streaming projects, ranging from microservice architectures using event sourcing to modern event streaming architectures based on technologies like Apache Kafka, Apache Flink and Apache Spark. And since our team includes software developers, data engineers and system administrators, we can provide a full-stack implementation: from setting up infrastructure (e.g. a Kafka cluster) and securing it, through integrating the streaming platform with other systems in the organization, to developing event streaming applications, advanced data analytics and AI.

For more information about our services, check out our website, and keep following our blog for both technical and less technical topics.

If you have any questions, we are just one click away.