The Problem

This article was first distributed in our 0800-DEVOPS newsletter.

If you’re interested in receiving interviews with thought leaders and a digest of exciting ideas from the world of DevOps straight to your inbox, subscribe to our 0800-DEVOPS newsletter!

For the past several years, working as a DevOps engineer, I’ve changed my motto from “CPU, RAM and disk storage are cheap – just buy me some more!” to “You are using too many resources, go back and see what the reason is!”. Since I’m also a former backend developer, I have the advantage of anticipating what could be wrong with resource-hungry Java-based apps. A year or two ago I would probably have said “ask for more resources”. But today we are no longer talking in terms of servers and VMs. We are talking about containers and pods running in the cloud. The cloud is vast and elastic, but it also costs money. As a consequence, resources are increasingly coming into focus.

The approach of having a Tomcat server running several monolithic apps, or an army of Spring Boot JAR monoliths sharing the same VM, is now, well, old school. Developing such apps is easy to a certain degree: we’re dealing with one codebase, debugging is easier, and so on.

In the age of microservices, we are no longer talking about running tens of apps on one VM; we are talking about running hundreds of apps on one VM. If we ignore app resources, we can easily end up paying way too much to AWS, Azure and others for apps that, for example, do not use 100% of their CPU/RAM 100% of the time.

Imagine that you have 2GB of RAM and 2 vCPUs, and a developer needs to deploy an app that has not been tested in a resource-constrained environment. As a DevOps engineer, you enforce a default resource limit of 700MB of RAM and 1 vCPU. The developer has tested the app on their laptop, where it starts in 13 seconds. With the resource limits, it starts in 60 seconds (values are based on my experience running Spring Boot apps on the OpenShift platform). That is roughly 4.6 times slower. Wow!
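One cheap way to see the gap between a laptop and a constrained pod is to ask the JVM what it thinks it has. The minimal sketch below (the class name `JvmResourceView` is hypothetical) uses only `Runtime` from the standard library. Assuming a container-aware JVM (JDK 10+ or 8u191+), running it under a 1 vCPU / 700MB limit should typically report a single processor and, with default settings, a maximum heap of roughly a quarter of the memory limit, while on a laptop both numbers are usually much larger.

```java
/**
 * Prints the resources the JVM believes it has.
 * Run it on a laptop and inside the container (with production limits) to compare.
 * Assumption: a container-aware JVM (JDK 10+ or 8u191+), which derives these
 * values from the container's cgroup limits instead of the host's hardware.
 */
final class JvmResourceView {

    /** Processor count; many JVM defaults (GC threads, the common ForkJoinPool) derive from it. */
    static int processors() {
        return Runtime.getRuntime().availableProcessors();
    }

    /** Maximum heap the JVM will attempt to use, in MB. */
    static long maxHeapMb() {
        return Runtime.getRuntime().maxMemory() / (1024 * 1024);
    }

    public static void main(String[] args) {
        System.out.println("available processors: " + processors());
        System.out.println("max heap (MB): " + maxHeapMb());
    }
}
```

If the numbers inside the pod are far lower than on the laptop, a slower startup like the one described above is not surprising: fewer threads and a smaller heap mean more garbage collection work during startup.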

OK, you adjust the health checks to accommodate the slow startup and life goes on. A few days later you receive “Out Of Memory” (OOM) alerts and eyebrows go way up. You put out the fire by adding more resources, increasing the limit to 1GB. Everything works fine… until… you receive alerts that the app is frequently restarting because the health checks are receiving “Request Timeout”, or that clients using the app are getting slow responses or none at all. What do you do? That one instance cannot handle the load, so you start another instance. Math does not lie! Two instances with a 1GB limit each take up all 2GB, so you end up with one app that is consuming all the resources.
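The “math does not lie” step can be sketched as simple packing arithmetic. This is an illustration, not a model of a real scheduler: it assumes each instance reserves exactly its limit (requests equal to limits), that “2GB” means 2048MB, and that the whole node is available to application pods. The class and method names are hypothetical.

```java
/**
 * Back-of-the-envelope packing math for the 2GB / 2 vCPU example.
 * Assumptions (illustrative only): each instance reserves exactly its limit,
 * and the whole node is available to application pods.
 */
final class NodeCapacity {

    /** How many instances of size perInstance fit into capacity (rounded down). */
    static int fit(int capacity, int perInstance) {
        if (capacity < 0 || perInstance <= 0) {
            throw new IllegalArgumentException("capacity must be >= 0 and perInstance > 0");
        }
        return capacity / perInstance;
    }

    /** Instances that fit when both memory (MB) and CPU (millicores) must be satisfied. */
    static int instancesThatFit(int nodeMemMb, int nodeCpuMillis, int memLimitMb, int cpuLimitMillis) {
        return Math.min(fit(nodeMemMb, memLimitMb), fit(nodeCpuMillis, cpuLimitMillis));
    }

    public static void main(String[] args) {
        int nodeMemMb = 2048;      // "2GB of RAM", assumed to be 2048 MB
        int nodeCpuMillis = 2000;  // "2 vCPU" expressed as millicores
        int cpuLimitMillis = 1000; // the 1 vCPU limit from the example

        // First the default 700MB limit, then the 1GB limit set after the OOM alerts.
        for (int memLimitMb : new int[] {700, 1024}) {
            int n = instancesThatFit(nodeMemMb, nodeCpuMillis, memLimitMb, cpuLimitMillis);
            int freeMb = nodeMemMb - n * memLimitMb;
            System.out.printf("limit %d MB / 1 vCPU -> %d instance(s), %d MB of RAM left%n",
                    memLimitMb, n, freeMb);
        }
    }
}
```

With the 700MB limit, two instances fit and 648MB of RAM is left over; after raising the limit to 1GB, two instances still fit, but nothing is left for any other app, and both vCPUs are reserved as well.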

Fortunately, there are a few enthusiasts and innovators determined to increase the number of apps that can run on the same amount of resources, without sacrificing the joy developers are accustomed to when using popular frameworks.

Quarkus

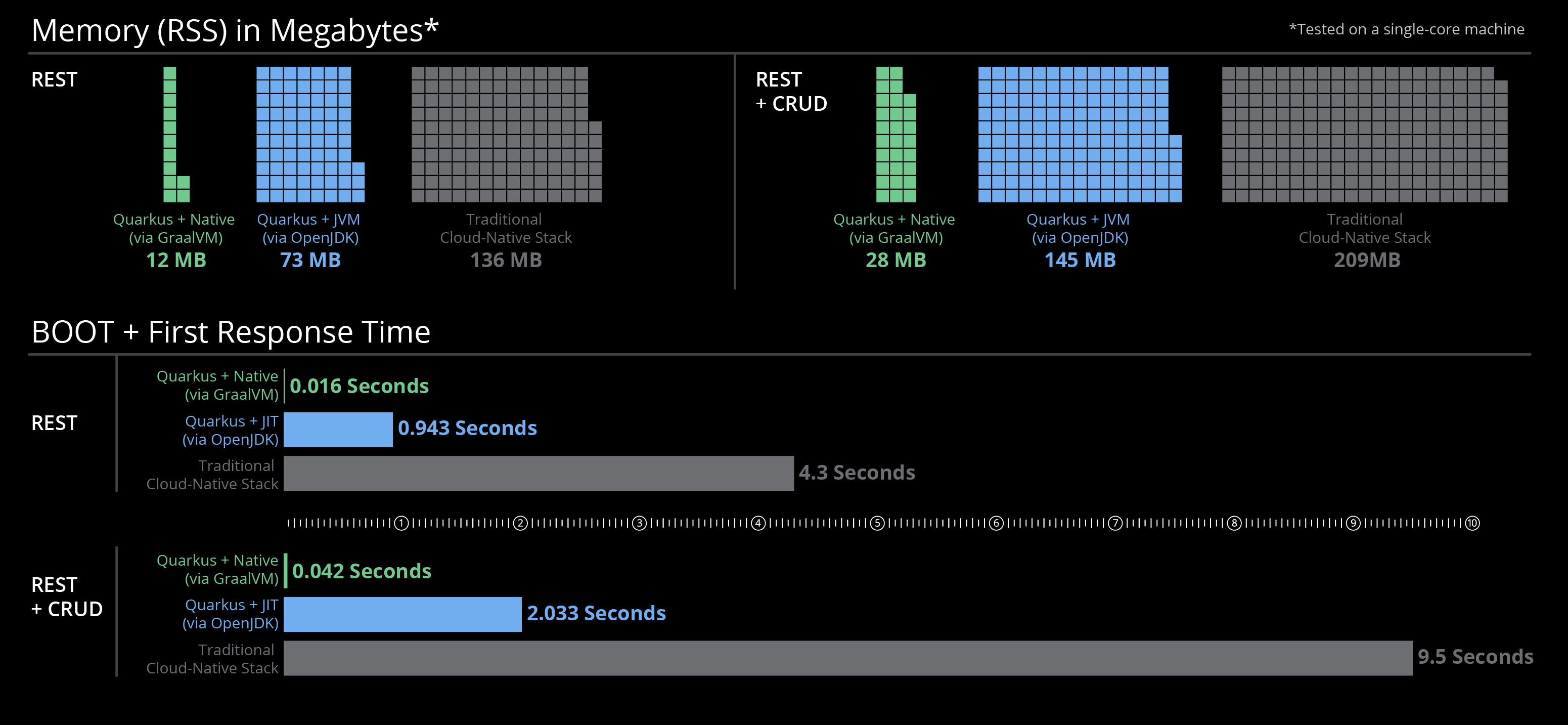

The Quarkus.io team is one of those enthusiasts. Their focus is to create a “quark” that combines the best Java libraries and standards into one Kubernetes Native Java stack. The idea is great, and the execution even better. With Quarkus on OpenJDK you can run apps that use as little as 73MB to 145MB of RAM and still have an amazingly fast startup time. Compiled to a native executable with GraalVM, those numbers are even smaller (between 12 and 28MB of RAM), with startup times under a second! (More info in the nice image above.)

Image source: https://quarkus.io/assets/images/quarkus_metrics_graphic_bootmem_wide.png

I must say that I’ve battle-tested early releases of Quarkus in production (both native and non-native) and it’s awesome. If you are familiar with Java EE, your learning curve is very short. Quarkus combines Eclipse Vert.x, MicroProfile, Netty, RESTEasy, Apache Camel and many others, which lets you use the knowledge you already have to create native Java apps. Live reload is always welcome, but with such a fast startup time you would hardly notice you are using it. Quarkus also has a unique extension philosophy, where the community can create new extensions and improve the existing ones.

So, a solution to the problem mentioned above (being able to run only two apps with 2GB of RAM) is here. With Quarkus, we can run 4-10 times more applications with the same resources. The only thing left is to encourage developers to try out this crazy new stuff. A few weeks ago Quarkus announced the official 1.0 release, so no one can say “Dude, it’s not even version 1.x”.

It’s also nice to know that Quarkus is backed by Red Hat and that it is open source, just saying!

Another nice thing is the start.spring.io-like application starter at https://code.quarkus.io/, so go and give it a try!

Live long and prosper!

###

If you’re interested in all things DevOps subscribe to our 0800-DEVOPS newsletter!

You will receive in your inbox interviews with thought leaders and a digest of interesting ideas from the world of DevOps, technical practices and increased productivity!

We will never spam you; we just share the things we discuss internally in our CROZ DevOps Community of Practice. Join us!
