Enterprise AI is moving to mission-critical use across industries and becoming part of how companies make decisions, support customers, manage operations, and create new digital services.

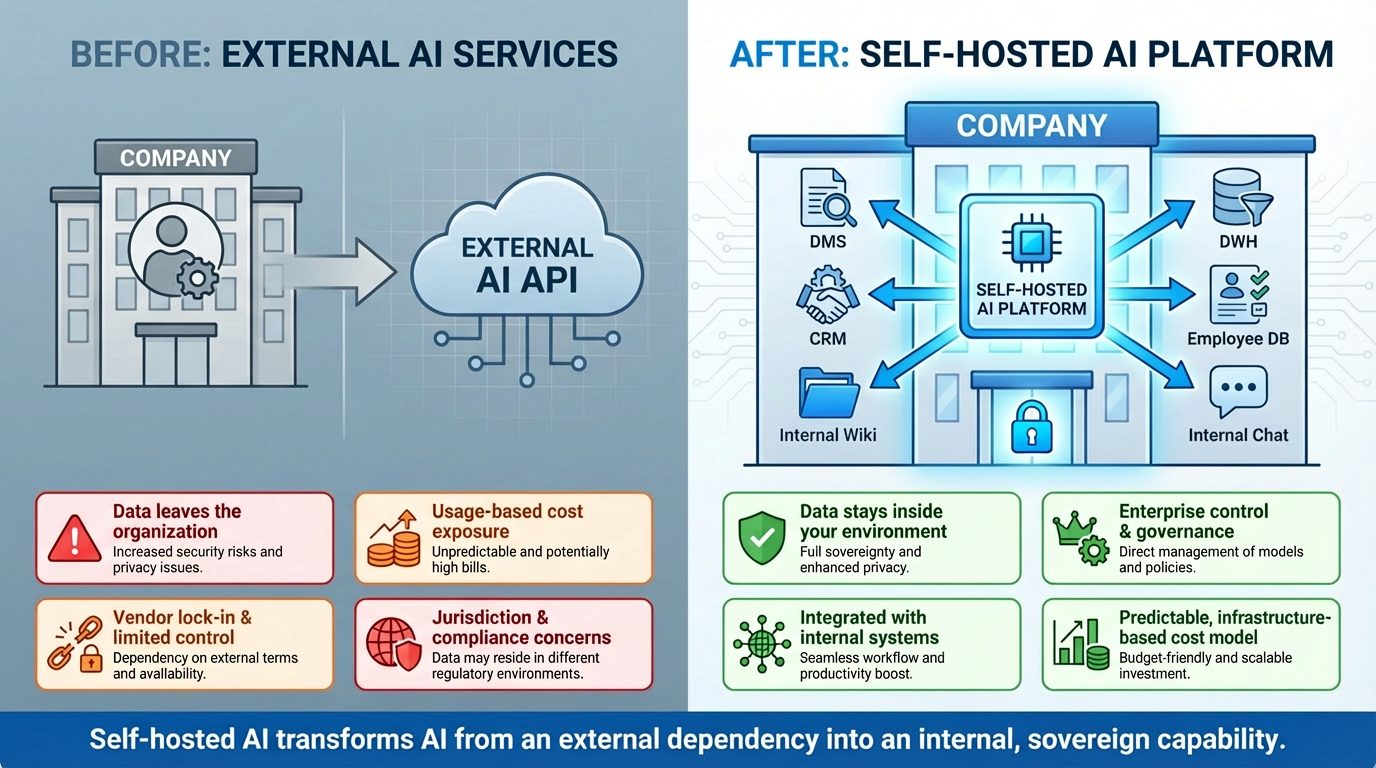

For many organizations, AI adoption relies on external cloud services and public large language model APIs. This is a natural starting point. It allows teams to experiment quickly without investing in infrastructure. But as AI starts touching sensitive data and core processes, the limits of this public cloud model are becoming clear. Concerns about data exposure, vendor lock-in, unpredictable usage-based pricing, and cross-border legal risks seriously constrain AI implementation in businesses.

At CROZ, we think that the next phase of AI adoption is about bringing AI inside the organization and turning it into a controlled, governed, internal capability rather than a black-box service. That is where self-hosted Enterprise AI Platforms come in.

Why Self-Hosted Enterprise AI Platforms Are Becoming a Strategic Imperative

At a high level, self-hosted AI means that models, data, and AI agents run entirely on infrastructure controlled by the organization, such as on-premises hardware or a private cloud environment. This change in where AI runs has deep consequences for how AI is used and what risks it carries.

First, there is the question of data. When all inference happens inside your environment, sensitive information never needs to leave your perimeter. That makes it much easier to enforce internal policies, answer regulatory questions, and satisfy security teams. The question “which third party gets access to our data?” gets a simple answer: “only systems we already control.”

Cost is another key driver. Public AI APIs are typically priced per token, which feels manageable at the beginning but can grow quickly as more teams start to rely on AI. For organizations that intend to use AI broadly across departments and use cases, continuing to send every request to an external provider can turn into an unpredictable operating expense. A self-hosted platform replaces that open-ended meter with a more stable infrastructure cost model, especially when combined with careful sizing and the right choice of models.
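To make the cost argument concrete, here is a minimal break-even sketch. Every figure in it is a hypothetical placeholder chosen for illustration, not a real vendor price or a CROZ cost; the function names are ours as well. It only shows the shape of the comparison: a usage-based bill that grows with token volume against a fixed monthly infrastructure cost.

```python
def monthly_api_cost(tokens_per_month: float, price_per_million_tokens: float) -> float:
    """Usage-based cost: grows in direct proportion to token volume."""
    return tokens_per_month / 1_000_000 * price_per_million_tokens


def break_even_tokens(fixed_monthly_cost: float, price_per_million_tokens: float) -> float:
    """Monthly token volume at which a fixed infrastructure cost equals the API bill."""
    return fixed_monthly_cost / price_per_million_tokens * 1_000_000


# Hypothetical inputs, for illustration only.
PRICE_PER_MILLION = 10.0       # currency units per 1M tokens (assumed)
FIXED_MONTHLY_COST = 20_000.0  # amortized hardware plus operations (assumed)

threshold = break_even_tokens(FIXED_MONTHLY_COST, PRICE_PER_MILLION)
print(f"Break-even at {threshold:,.0f} tokens per month")
```

Below the threshold the external API is cheaper; above it, each additional token widens the gap in favor of the fixed-cost platform. A realistic comparison would also account for staffing, energy, and hardware refresh cycles.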

Self-hosting also makes it possible to adjust AI models to business requirements, rather than forcing the business to adapt to an external service. Models can be adapted to internal terminology, internal processes, and internal knowledge sources. AI stops being generic and starts becoming something that understands how your company works.

Finally, internal deployment removes many of the limits around integration. AI agents can safely talk to internal systems such as your DMS, CRM, ERP, data warehouse, and HR tools, all without exposing sensitive data to the outside world. That unlocks real AI automation and real productivity gains, and removes a serious obstacle to AI adoption in business.

All of this adds up to a strategic shift: AI moves from being “something we call in the cloud” to “a capability we operate and govern ourselves.”

Digital Sovereignty as a Business Requirement

For European organizations, there is another dimension that cannot be ignored: digital sovereignty. Regulators, public institutions, and large enterprises want guarantees that their data, their workloads, and their AI systems are not only secure, but also under the right jurisdictional and operational control.

The European Commission’s Cloud Sovereignty Framework (CSF) formalizes a lot of these concerns. It defines a set of sovereignty objectives that cover more than just where data is stored. They touch on who has legal control, how independent the supply chain is, how operations are managed, how transparent the system is, and how resilient it will be in the long run.

In practice, that means cloud services and AI platforms are now evaluated not simply on technical features, but also on questions like:

- Who ultimately controls the infrastructure and software stack?

- Under which jurisdictions can data and systems be accessed?

- How much visibility does the customer have into the operations of the service?

- How easy is it to exit or change providers if needed?

The current “AI as a service” model, running on global hyperscale clouds, often struggles to give strong answers to these questions for sensitive workloads. Even when data residency is in the EU, the legal and operational dependencies may not be. For public sector, finance, healthcare, critical infrastructure, and many large enterprises, that is a serious concern.

A self-hosted AI platform deployed on infrastructure owned or fully controlled by the organization offers a much clearer sovereignty story. The data stays inside. The operational control stays inside. The choice of components can be made to avoid lock-in. And the overall architecture can be aligned with the CSF’s objectives in a measurable way.

This is the context in which we built Jarvis.

Introducing Jarvis: Our Self-Hosted Enterprise AI Platform

Jarvis is CROZ's self-hosted Enterprise AI platform, designed to bring security, sovereignty, cost control, and deep integration with all our internal systems into a single, coherent environment.

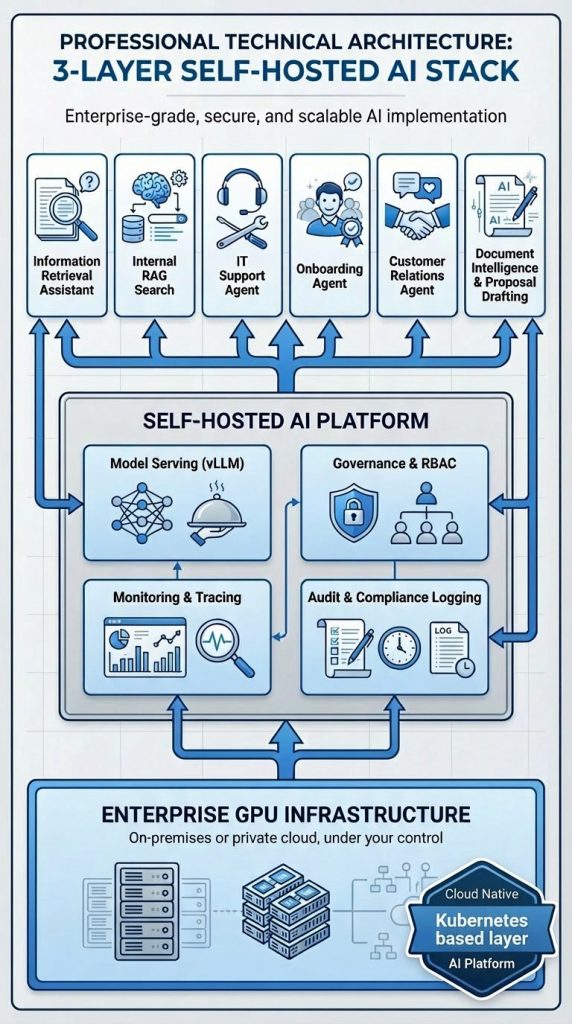

At its core, Jarvis provides a centralized AI platform that runs on our own GPU infrastructure. It is the internal “AI backbone” that powers agents, knowledge assistants, and automated workflows across our company.

One of the core strengths of Jarvis is its cloud-native architecture. The platform uses a secure Kubernetes-based layer to manage and scale AI workloads. This provides robust lifecycle management for models and services, scalability, and secure multi-tenant cluster management. By building Jarvis on this foundation, we achieve a hardened, production-ready platform with strong security, governance, and operational reliability.

Jarvis also uses vLLM for high-performance model serving, giving the platform the ability to run modern large language models efficiently, serve multiple models in parallel, and scale as usage grows.
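vLLM ships an OpenAI-compatible HTTP server, so internal applications can talk to a self-hosted model with the same request shape they would send to a public API. The sketch below shows what such a client could look like using only the Python standard library. The base URL, the model name, and the helper functions are assumptions made for illustration; they do not describe the actual Jarvis endpoints.

```python
import json
import urllib.request

# Hypothetical values: replace with your own internal endpoint and served model.
BASE_URL = "http://localhost:8000/v1"
MODEL_NAME = "example-model"


def build_chat_request(prompt: str, model: str = MODEL_NAME, max_tokens: int = 256) -> dict:
    """Build an OpenAI-style chat completion payload."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }


def ask(prompt: str, base_url: str = BASE_URL) -> str:
    """Send the prompt to the server and return the text of the first choice."""
    body = json.dumps(build_chat_request(prompt)).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=60) as response:
        reply = json.load(response)
    return reply["choices"][0]["message"]["content"]
```

Calling `ask("Summarize our travel policy")` requires a running server at `BASE_URL`. Because the request never leaves the internal network, the prompt and the response stay inside the organization's perimeter.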

On top of this technical foundation, we have implemented governance, traceability and operational insight. We have integrated tracing of requests, detailed usage analytics, role-based access control, and comprehensive audit logging. These capabilities are essential for our organization since we want to use AI at scale and remain compliant with internal and external requirements.

Because Jarvis runs under our own jurisdiction, on our own hardware, it aligns naturally with the requirements of the EU Cloud Sovereignty Framework. Data and models never fall under foreign legal regimes, operations remain transparent, and the technology stack avoids hard lock-in to any single non-EU vendor. For our organization, Jarvis is a key building block for a strategic, long-term AI capability.

What Jarvis Already Delivers in Practice

Jarvis is not a slideware concept. We have already implemented a broad set of practical capabilities that demonstrate how self-hosted AI can transform everyday work inside the company.

One major area is deep integration with existing company systems. We have built agents that can interact with the Document Management System, GitLab, Internal Wiki documentation, and the employee database. Instead of each system being an isolated silo, Jarvis agents act as a unifying intelligence layer that can see across them, understand context, and assist users in more natural ways.

One key capability is our internal retrieval-augmented generation (RAG) system, which is designed with role-based access control. When the agent retrieves documents to support an answer, it only considers what the specific user or role is allowed to see. This keeps the experience powerful without compromising security or compliance. The result is a knowledge layer that understands both the content and the access model of our organization.
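The core idea of access-aware retrieval can be sketched in a few lines: filter the corpus by the user's roles first, and only then rank what remains. The example below is a simplified illustration, not the Jarvis implementation. The `Document` class, the word-overlap score, and the sample corpus are all hypothetical; a production system would typically use vector search and an external identity provider.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    allowed_roles: frozenset


def score(query: str, text: str) -> int:
    """Toy relevance score: number of distinct query words found in the text."""
    words = set(text.lower().split())
    return sum(1 for word in set(query.lower().split()) if word in words)


def retrieve(query: str, user_roles: set, corpus: list, top_k: int = 3) -> list:
    """Filter by access rights first, then rank only the permitted documents."""
    permitted = [doc for doc in corpus if doc.allowed_roles & user_roles]
    ranked = sorted(permitted, key=lambda doc: score(query, doc.text), reverse=True)
    # Drop documents that share no words with the query.
    return [doc for doc in ranked[:top_k] if score(query, doc.text) > 0]


# Hypothetical sample corpus.
CORPUS = [
    Document("wiki-1", "vacation policy for all employees", frozenset({"employee", "hr"})),
    Document("hr-7", "salary bands and vacation payout rules", frozenset({"hr"})),
    Document("dev-3", "gitlab pipeline setup guide", frozenset({"employee"})),
]
```

Filtering before ranking matters: a restricted document never enters the candidate set, so it cannot leak into the context passed to the model, even as a low-ranked result.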

On top of that, we have a cross-system information retrieval assistant. This is an internal chat interface that can answer questions such as “What do we already know about customer X?”, “Where is the latest proposal for this product?”, or “What were the main decisions in last quarter’s planning meeting?” It does this by searching across Internal Wiki documentation, internal chats, the DMS, HR data, and other internal systems, then synthesizing the result into a meaningful answer. Information that used to be scattered across various systems becomes reachable in a single conversation thanks to underlying Enterprise AI Platform capabilities.

Our employees use Jarvis for document intelligence too. Because everything runs in-house, the platform can analyze and process any type of document, including sensitive ones such as contracts, financial data, and offers, without privacy concerns. One example is analyzing large amounts of tender documentation written in a foreign language. Jarvis can find similar documents using semantic search rather than just keyword matching, which is particularly useful when a quick response is needed. It can also extract structured information from unstructured files, turning static documents into actionable data.
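Semantic search compares documents by the direction of their embedding vectors rather than by shared keywords. The following sketch illustrates the ranking step with cosine similarity. The three-dimensional vectors are hand-made stand-ins for real embeddings, and the document names are hypothetical; in practice an embedding model would produce vectors with hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a: list, b: list) -> float:
    """Cosine of the angle between two vectors; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def most_similar(query_vec: list, index: dict, top_k: int = 2) -> list:
    """Return the ids of the top_k documents closest to the query vector."""
    ranked = sorted(
        index.items(),
        key=lambda item: cosine_similarity(query_vec, item[1]),
        reverse=True,
    )
    return [doc_id for doc_id, _ in ranked[:top_k]]


# Hand-made vectors standing in for real embeddings (assumed for illustration).
INDEX = {
    "tender-2023": [0.9, 0.1, 0.0],
    "contract-acme": [0.1, 0.9, 0.1],
    "tender-2024": [0.8, 0.2, 0.1],
}
```

With these toy vectors, a query pointing along the first axis ranks the two tender documents ahead of the contract, even though no keyword comparison takes place.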

This is just the beginning, and we are constantly expanding our Jarvis Enterprise AI platform with new integrations, new AI agents, and new capabilities.

Bringing an AI Platform Into Your Organization

Delivering a self-hosted Enterprise AI platform into an organization is not only a technical project; it is a strategic change. That’s why our work usually starts with understanding the business context and designing a roadmap rather than jumping straight to installations.

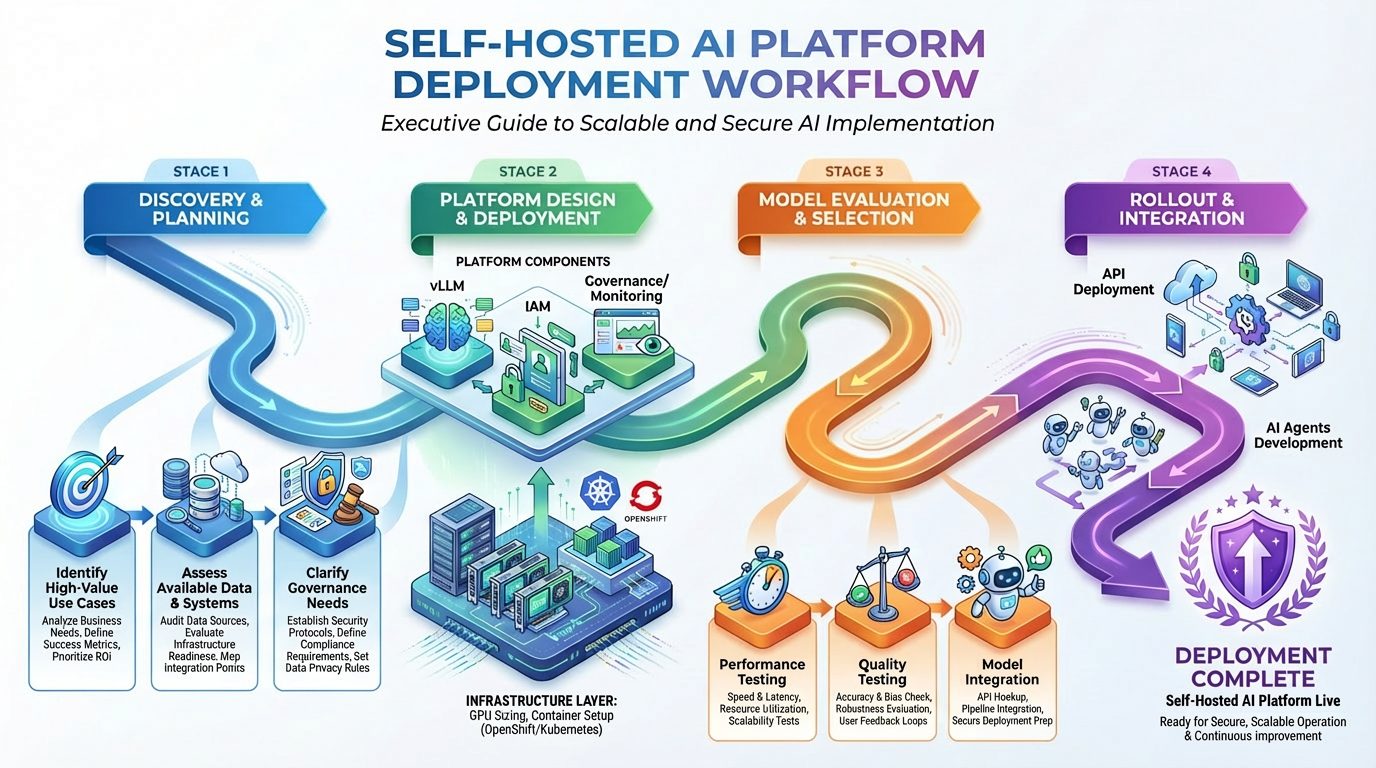

We begin by identifying high-value use cases, assessing available data and existing systems, and clarifying governance needs. This helps ensure that the initial deployment produces visible value quickly while also laying the foundation for future expansion.

We then design and deploy the platform by sizing GPU infrastructure, setting up a container environment (OpenShift AI, or Kubernetes and Kubeflow), configuring vLLM, integrating identity and access management, and establishing governance, monitoring, and traceability. Once the core enterprise AI platform is live, we move into model evaluation and selection by running model performance and quality tests. Once model integration is finished, the company can start rolling out model usage via API. In parallel, the development and rollout of AI agents that deeply integrate with company systems can also start.
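For the GPU sizing step, a useful first-order estimate is that model weights need roughly the parameter count multiplied by the bytes per parameter. The sketch below applies that rule and reserves part of each GPU for the KV cache and activations. The 30% headroom is an assumed, workload-dependent placeholder, and the function names are ours; real sizing should be validated with load tests on the target hardware.

```python
import math

# Bytes used to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(params_billions: float, dtype: str = "fp16") -> float:
    """Memory needed just to hold the weights, in GB (10^9 bytes)."""
    return params_billions * BYTES_PER_PARAM[dtype]


def gpus_needed(
    params_billions: float,
    gpu_memory_gb: float,
    dtype: str = "fp16",
    headroom: float = 0.3,
) -> int:
    """Rough GPU count when a fraction `headroom` of each GPU is reserved
    for KV cache and activations (an assumed value, not a measured one)."""
    usable_per_gpu = gpu_memory_gb * (1.0 - headroom)
    return math.ceil(weight_memory_gb(params_billions, dtype) / usable_per_gpu)
```

For example, a 70-billion-parameter model in FP16 needs about 140 GB for weights alone, so under these assumptions `gpus_needed(70, 80)` suggests three 80 GB GPUs. Long context windows and high concurrency increase KV cache demand and can push the real number higher.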

Finally, we offer ongoing support and managed services, covering upgrades, monitoring, tuning, model updates, and compliance reviews. The goal is not just to install a platform, but to help the organization grow its AI capability steadily and safely over time.

The Business Impact of a Self-Hosted Enterprise AI Platform

When an Enterprise AI Platform is in place and integrated with the organization’s systems, the impact shows up in several ways.

Employees spend less time searching for information and more time using it. Repetitive tasks like drafting, looking up data, and cross-checking documents become automated or accelerated. Decisions can be made faster, with better context. Support functions are more responsive because they can rely on AI agents for first-line interactions and information retrieval.

From a risk perspective, leadership gains confidence that sensitive data does not leave the organization, that AI usage is traceable and governed, and that future regulatory changes can be met from a position of control rather than dependence.

Financially, as usage grows, the economics of a self-hosted platform become increasingly attractive compared to paying per interaction to external services. The organization is building an asset it owns instead of feeding an external meter.

Strategically, a sovereign, internal AI capability becomes a differentiator. It allows companies to innovate on top of their own data and processes, without waiting for generic features from global platforms. It also means that core competencies remain internal, rather than being outsourced to third parties.

AI Belongs Inside Your Walls!

Public cloud AI services showed what is possible with AI. The next step is about making AI safe, sustainable, integrated with existing systems, and truly strategic. For many organizations, especially in Europe, and especially those handling sensitive data, that means moving from external AI as a service to an internal, self-hosted Enterprise AI Platform.

Jarvis is our answer to that need: a sovereign, secure, and flexible AI platform that runs on a company's own infrastructure, aligns with EU digital sovereignty principles, and already powers real agents and workflows across key business systems.

>> Why Work with Us? <<

Our team brings proven expertise in building enterprise AI platforms, with hands-on experience spanning OpenShift AI, Kubernetes, vLLM, ClearML, and GPU infrastructure.

We possess a deep understanding of compliance requirements, ensuring our Enterprise AI Platform solutions meet the highest regulatory standards.

We partner with the most prominent vendors in Enterprise AI: NVIDIA, Mistral, IBM, Red Hat, and ClearML.

Our capabilities are anchored by Jarvis, a fully developed reference AI platform that demonstrates our technical maturity and operational readiness.

If you have any questions, we are just one click away.