Introduction

In the previous post, we explored the effects of different GPU parallelism (sharding) strategies and of the maximum batch size setting for parallel sequence processing.

In this post, we continue our series on tuning the vLLM runtime for high loads.

For benchmarking, we used the same hardware as before: a single server node with 8 NVIDIA A100 SXM4 80 GB GPUs.

We also used the same deepseek-ai/DeepSeek-R1-Distill-Llama-70B model, which is distilled from DeepSeek-R1 and based on meta-llama/Llama-3.3-70B-Instruct. It has the following characteristics:

- Number of parameters – 70.6B

- Parameter precision – BF16 (bfloat16), 2 bytes per parameter

- Number of layers (L) – 80

- Hidden dimension (H) – 8192

- Attention mechanism – Grouped Query Attention (GQA)

- Number of KV heads (n_kv_heads) – 8

- Number of query heads (n_q_heads) – 64

- Maximum context length (max_position_embeddings) – 131072 tokens
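These hyperparameters are enough for a back-of-the-envelope estimate of how much memory the weights and the KV cache need. The sketch below assumes the usual Llama-style layout, where the head dimension is H / n_q_heads and every layer stores one key and one value vector per KV head per token; it ignores activations and framework overhead.

```python
# Hyperparameters of DeepSeek-R1-Distill-Llama-70B as listed above
NUM_PARAMS = 70.6e9
BYTES_PER_VALUE = 2        # BF16
NUM_LAYERS = 80            # L
HIDDEN = 8192              # H
N_Q_HEADS = 64
N_KV_HEADS = 8
MAX_CONTEXT = 131072       # max_position_embeddings

# Assumption: Llama-style attention, head_dim = H / n_q_heads
HEAD_DIM = HIDDEN // N_Q_HEADS


def kv_bytes_per_token() -> int:
    """Bytes of KV cache one token occupies across all layers.

    The leading factor 2 accounts for one key and one value vector
    per KV head per layer.
    """
    return 2 * NUM_LAYERS * N_KV_HEADS * HEAD_DIM * BYTES_PER_VALUE


weights_gb = NUM_PARAMS * BYTES_PER_VALUE / 1e9
per_token = kv_bytes_per_token()
full_context_gib = per_token * MAX_CONTEXT / 2**30

print(f"head_dim: {HEAD_DIM}")
print(f"weights: ~{weights_gb:.1f} GB")
print(f"KV cache per token: {per_token} bytes ({per_token // 1024} KiB)")
print(f"KV cache for one {MAX_CONTEXT}-token sequence: ~{full_context_gib:.0f} GiB")
```

Under these assumptions the BF16 weights take roughly 141 GB, and each token adds 320 KiB of KV cache, about 40 GiB for a single full-length sequence. With 64 KV heads instead of 8, the KV cache would be 8x larger, which is the saving GQA buys.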

For the benchmark tests, we used datasets that we constructed ourselves and made publicly available on our Hugging Face profile page.

Benchmark tests were performed with the benchmark_serving.py script, which we refactored to support two additional parameters (min-tokens and max-tokens). Each test was run locally on the server node hosting the model.

In our tests, we used vLLM v0.7.3 with the V0 engine.

One token at a time is too slow

vLLM's scheduler is a critical component that manages the flow of requests during LLM inference. At its core, vLLM uses iteration-level scheduling (continuous batching): it constructs a new batch after every model forward iteration instead of waiting for all requests in a batch to finish, as request-level scheduling does. This significantly improves GPU utilization, because new requests can join the batch at any iteration. A key enhancement introduced in vLLM v0.6.0 is multi-step scheduling, controlled by the --num-scheduler-steps parameter, which performs scheduling and input preparation once for several consecutive model forward steps without interrupting the GPU. Setting higher values for --num-scheduler-steps (typically between 10 and 15) spreads the CPU overhead across multiple iterations, reducing GPU idle time and improving throughput.
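To see why spreading the CPU work helps, and what it costs, consider a deliberately simplified model in which scheduling and input preparation take a fixed CPU time per scheduling round and are not overlapped with GPU work. The step costs below are hypothetical, not measurements from our setup, and the function names are our own illustration rather than anything in vLLM.

```python
def avg_step_time_ms(gpu_step_ms: float, cpu_overhead_ms: float,
                     num_scheduler_steps: int) -> float:
    """Average wall time per forward step in a toy model where the CPU
    overhead (scheduling + input preparation) is paid once per
    `num_scheduler_steps` consecutive forward steps."""
    if num_scheduler_steps < 1:
        raise ValueError("num_scheduler_steps must be >= 1")
    return gpu_step_ms + cpu_overhead_ms / num_scheduler_steps


def worst_case_admission_wait_ms(gpu_step_ms: float, cpu_overhead_ms: float,
                                 num_scheduler_steps: int) -> float:
    """Longest time a newly arrived request may wait for the current
    multi-step run to finish before it can be scheduled (same toy model)."""
    return cpu_overhead_ms + gpu_step_ms * num_scheduler_steps


# Hypothetical costs, chosen only for illustration
GPU_STEP_MS = 30.0
CPU_OVERHEAD_MS = 10.0

for steps in (1, 8, 16, 32):
    avg = avg_step_time_ms(GPU_STEP_MS, CPU_OVERHEAD_MS, steps)
    wait = worst_case_admission_wait_ms(GPU_STEP_MS, CPU_OVERHEAD_MS, steps)
    print(f"steps={steps:2d}  avg step {avg:.2f} ms  max admission wait {wait:.0f} ms")
```

In this model the per-step gain shrinks quickly (the overhead term is divided by the step count) while the wait for newly arrived requests grows linearly, which is one plausible reading of why time to first token tends to rise as the value increases.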

Another technique for increasing the number of tokens generated per iteration is speculative decoding, but it is not compatible with --num-scheduler-steps, so the two cannot be used at the same time. We will test speculative decoding performance in a future post.

Tests:

We performed 4 test runs on a sampled set of 200 sequences with lengths between 100 and 32K tokens.

All tests were run with benchmark_serving.py set to --max-concurrency 100 --request-rate 100.

We tested four values of --num-scheduler-steps: 1, 8, 16, and 32.
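The four server configurations differ only in --num-scheduler-steps. As a sketch, the sweep can be generated from the shared arguments in the commands listed with each run. The executable used to launch the server and the dataset options of our modified benchmark_serving.py are not shown in this post, so they are deliberately left out here; the variable and function names are illustrative.

```python
from typing import Dict, List

# Server arguments shared by every run in this section (copied from the run commands)
BASE_ARGS: List[str] = [
    "--model=deepseek-ai/DeepSeek-R1-Distill-Llama-70B",
    "--trust-remote-code",
    "--device=cuda",
    "--disable-log-requests",
    "--gpu-memory-utilization=0.95",
    "--tensor-parallel-size=8",
    "--max-model-len=131072",
    "--max-num-batched-tokens=98304",
    "--enable-chunked-prefill",
]

# Load settings passed to benchmark_serving.py for every run
CLIENT_ARGS: List[str] = ["--max-concurrency", "100", "--request-rate", "100"]


def server_args(num_scheduler_steps: int) -> List[str]:
    """Server argument list for one point of the sweep."""
    return BASE_ARGS + [f"--num-scheduler-steps={num_scheduler_steps}"]


sweep: Dict[int, List[str]] = {steps: server_args(steps) for steps in (1, 8, 16, 32)}

for steps, args in sweep.items():
    print(f"[steps={steps}] server: {' '.join(args)}")
print(f"client: {' '.join(CLIENT_ARGS)}")
```

Keeping the shared arguments in one list makes it easy to confirm that only the swept parameter changes between runs.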

We also ran a test on a single sequence, with benchmark_serving.py parameters set to --max-concurrency 1 --request-rate 1, using the same four --num-scheduler-steps values (1, 8, 16, and 32).

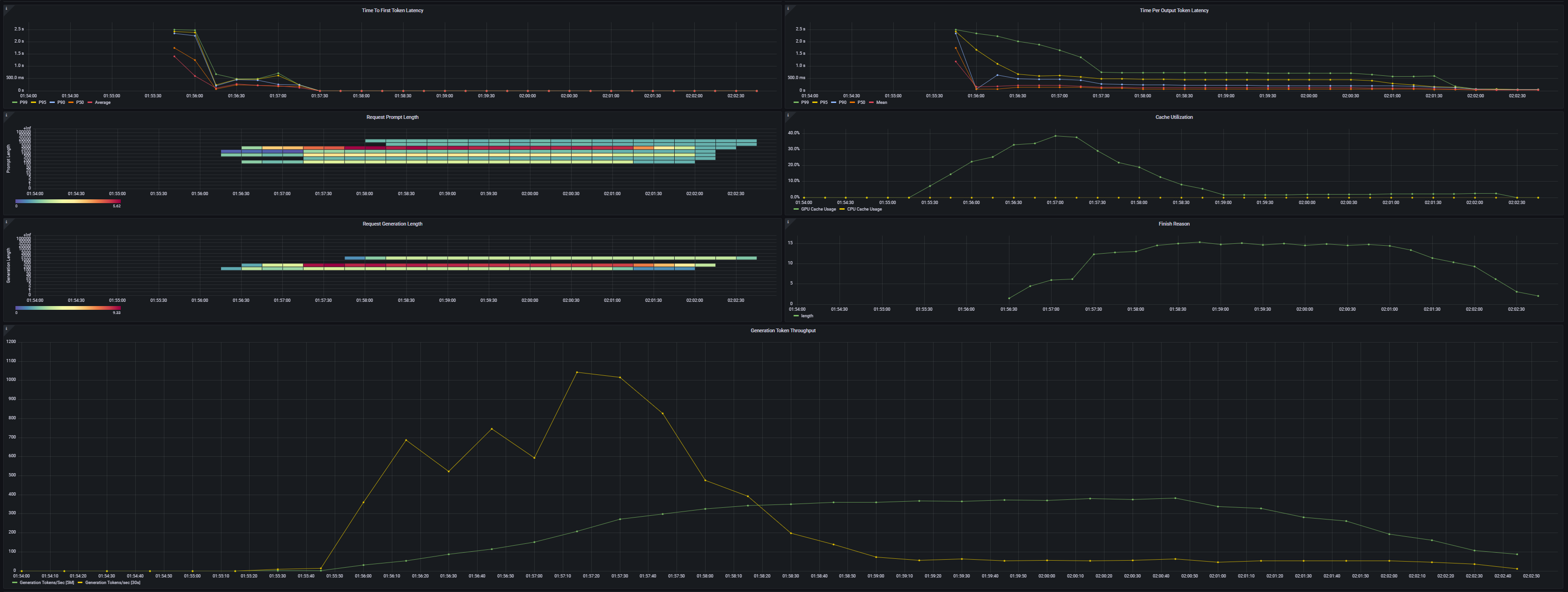

First test run with --num-scheduler-steps=1

Command: --model=deepseek-ai/DeepSeek-R1-Distill-Llama-70B --trust-remote-code --device=cuda --disable-log-requests --gpu-memory-utilization=0.95 --tensor-parallel-size=8 --max-model-len=131072 --max-num-batched-tokens=98304 --enable-chunked-prefill --num-scheduler-steps=1

| Serving benchmark result | Value |
|---|---|
| Successful requests | 200 |
| Benchmark duration (s) | 451.39 |
| Total input tokens | 576043 |
| Total generated tokens | 121960 |
| Request throughput (req/s) | 0.44 |
| Output token throughput (tok/s) | 270.19 |
| Total token throughput (tok/s) | 1546.35 |

| Latency (ms) | Mean | Median | P25 | P75 | P99 |
|---|---|---|---|---|---|
| Time to First Token (TTFT) | 9677.00 | 4276.29 | 324.65 | 19775.17 | 29410.84 |
| Time per Output Token, excl. 1st token (TPOT) | 127.20 | 132.57 | 82.10 | 162.08 | 231.83 |
| Inter-token Latency (ITL) | 92.12 | 66.16 | 38.72 | 72.06 | 571.02 |
| End-to-end Latency (E2EL) | 65761.05 | 57516.26 | 37496.89 | 87717.69 | 187088.09 |

Second test run with --num-scheduler-steps=8

Command: --model=deepseek-ai/DeepSeek-R1-Distill-Llama-70B --trust-remote-code --device=cuda --disable-log-requests --gpu-memory-utilization=0.95 --tensor-parallel-size=8 --max-model-len=131072 --max-num-batched-tokens=98304 --enable-chunked-prefill --num-scheduler-steps=8

| Serving benchmark result | Value |
|---|---|
| Successful requests | 200 |
| Benchmark duration (s) | 417.31 |
| Total input tokens | 576043 |
| Total generated tokens | 120906 |
| Request throughput (req/s) | 0.48 |
| Output token throughput (tok/s) | 289.73 |
| Total token throughput (tok/s) | 1670.12 |

| Latency (ms) | Mean | Median | P25 | P75 | P99 |
|---|---|---|---|---|---|
| Time to First Token (TTFT) | 9191.33 | 4706.58 | 749.80 | 19822.95 | 29975.90 |
| Time per Output Token, excl. 1st token (TPOT) | 122.71 | 125.81 | 78.90 | 159.22 | 221.59 |
| Inter-token Latency (ITL) | 88.16 | 48.10 | 31.53 | 64.53 | 725.43 |
| End-to-end Latency (E2EL) | 62397.53 | 55260.64 | 35242.21 | 84943.78 | 154904.74 |

Third test run with --num-scheduler-steps=16

Command: --model=deepseek-ai/DeepSeek-R1-Distill-Llama-70B --trust-remote-code --device=cuda --disable-log-requests --gpu-memory-utilization=0.95 --tensor-parallel-size=8 --max-model-len=131072 --max-num-batched-tokens=98304 --enable-chunked-prefill --num-scheduler-steps=16

| Serving benchmark result | Value |
|---|---|
| Successful requests | 200 |
| Benchmark duration (s) | 435.86 |
| Total input tokens | 576043 |
| Total generated tokens | 130076 |
| Request throughput (req/s) | 0.46 |
| Output token throughput (tok/s) | 298.44 |
| Total token throughput (tok/s) | 1620.07 |

| Latency (ms) | Mean | Median | P25 | P75 | P99 |
|---|---|---|---|---|---|
| Time to First Token (TTFT) | 9762.52 | 6548.22 | 1440.08 | 20242.90 | 30784.32 |
| Time per Output Token, excl. 1st token (TPOT) | 121.23 | 126.62 | 77.82 | 156.89 | 217.45 |
| Inter-token Latency (ITL) | 83.17 | 48.05 | 29.19 | 62.48 | 756.34 |
| End-to-end Latency (E2EL) | 63770.08 | 56241.90 | 35615.35 | 81408.31 | 183133.09 |

Fourth test run with --num-scheduler-steps=32

Command: --model=deepseek-ai/DeepSeek-R1-Distill-Llama-70B --trust-remote-code --device=cuda --disable-log-requests --gpu-memory-utilization=0.95 --tensor-parallel-size=8 --max-model-len=131072 --max-num-batched-tokens=98304 --enable-chunked-prefill --num-scheduler-steps=32

| Serving benchmark result | Value |
|---|---|
| Successful requests | 200 |
| Benchmark duration (s) | 247.75 |
| Total input tokens | 576043 |
| Total generated tokens | 110203 |
| Request throughput (req/s) | 0.81 |
| Output token throughput (tok/s) | 444.81 |
| Total token throughput (tok/s) | 2769.88 |

| Latency (ms) | Mean | Median | P25 | P75 | P99 |
|---|---|---|---|---|---|
| Time to First Token (TTFT) | 10650.93 | 8176.03 | 3098.56 | 16123.45 | 33354.48 |
| Time per Output Token, excl. 1st token (TPOT) | 119.04 | 125.47 | 77.49 | 160.34 | 212.94 |
| Inter-token Latency (ITL) | 92.69 | 58.84 | 36.21 | 63.53 | 995.24 |
| End-to-end Latency (E2EL) | 61634.45 | 56020.97 | 36050.28 | 80517.57 | 161680.31 |

Conclusion:

The benchmarking results indicate that increasing num-scheduler-steps generally improves performance, most visibly in inter-token latency (ITL). Comparing the baseline setting (num-scheduler-steps=1) with the highest-throughput setting (num-scheduler-steps=32, which raised total token throughput from 1546.35 to 2769.88 tok/s), median ITL improved by approximately 11.06%, while mean ITL degraded slightly (0.62%). In other words, typical gaps between tokens became shorter, but the tail grew longer: P99 ITL rose from 571.02 ms to 995.24 ms.

Time per output token (TPOT) also improved moderately, with mean and median reductions of 6.42% and 5.36%, respectively. However, there is a notable trade-off in time to first token (TTFT): mean and median TTFT increased by about 10.06% and 91.19%, respectively, indicating a significant initial latency cost.

Weighing these trade-offs, we consider num-scheduler-steps=16 the best balance for our workload. It delivered the lowest mean and median ITL of the four runs (83.17 ms and 48.05 ms, versus 92.12 ms and 66.16 ms at the baseline) and a lower mean TPOT (121.23 ms versus 127.20 ms), while median TTFT rose to 6548.22 ms rather than the 8176.03 ms seen with num-scheduler-steps=32.
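The percentages quoted in this conclusion are plain relative changes against the num-scheduler-steps=1 baseline. A small helper reproduces them from the benchmark results above; for latency metrics, a negative value means an improvement. The helper name is our own.

```python
def pct_change(before: float, after: float) -> float:
    """Relative change in percent; for latencies, negative means faster."""
    return (after - before) / before * 100.0


# (metric, num-scheduler-steps=1, num-scheduler-steps=32), values in ms from the benchmark results
comparisons = [
    ("mean ITL", 92.12, 92.69),
    ("median ITL", 66.16, 58.84),
    ("mean TPOT", 127.20, 119.04),
    ("median TPOT", 132.57, 125.47),
    ("mean TTFT", 9677.00, 10650.93),
    ("median TTFT", 4276.29, 8176.03),
]

changes = {name: pct_change(before, after) for name, before, after in comparisons}
for name, change in changes.items():
    print(f"{name}: {change:+.2f}%")
```

The same helper applies to any pair of runs, for example comparing num-scheduler-steps=16 against the baseline.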

Prefix caching

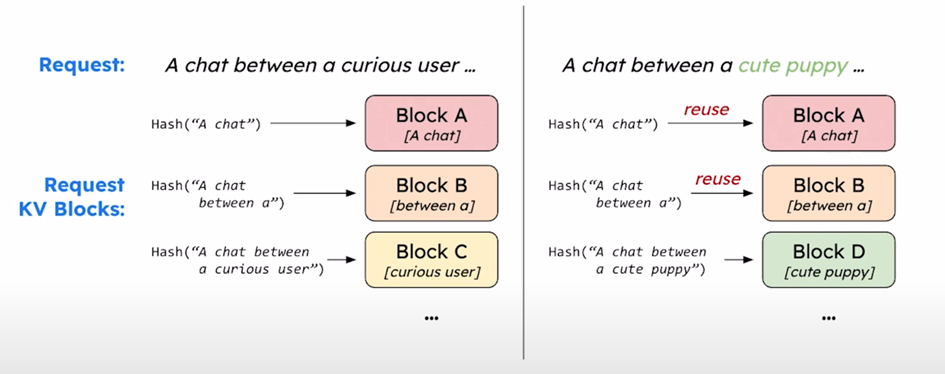

Prefix caching in vLLM's PagedAttention implementation uses a hash-based approach to optimize memory usage and reduce computational overhead. PagedAttention partitions the key-value (KV) cache into logical blocks, and for prefix caching each block is uniquely identified by the tokens it contains together with the tokens in the prefix that precedes it. When a new sequence shares a prefix with earlier ones, vLLM can reuse the cached KV blocks directly instead of recomputing them.

This is accomplished by hashing the KV blocks: blocks with the same hash value (such as shared prefixes across different requests) can be mapped to the same physical block, which avoids storing duplicate copies of a shared prefix and eliminates redundant computation. The feature is enabled with the --enable-prefix-caching engine argument.
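A toy version of the block-hashing idea: each full block's hash covers its own tokens plus the hash of the block before it, so two sequences map to the same physical blocks exactly as far as their token prefixes agree, block by block. The block size of 16 and the class and function names are illustrative assumptions, not vLLM internals, and the sketch leaves out reference counting and eviction.

```python
from typing import Dict, List, Optional, Tuple

BLOCK_SIZE = 16  # assumption: tokens per KV-cache block


def block_hashes(tokens: List[int], block_size: int = BLOCK_SIZE) -> List[int]:
    """Hash every *full* block together with the hash of the block before it,
    so a block's hash identifies the whole prefix that ends with it."""
    hashes: List[int] = []
    parent: Optional[int] = None
    for start in range(0, len(tokens) - block_size + 1, block_size):
        block = tuple(tokens[start:start + block_size])
        parent = hash((parent, block))
        hashes.append(parent)
    return hashes


class ToyPrefixCache:
    """Maps block hashes to physical block ids (no eviction, no ref counting)."""

    def __init__(self) -> None:
        self.hash_to_block: Dict[int, int] = {}
        self.num_blocks = 0

    def allocate(self, tokens: List[int]) -> Tuple[List[int], int]:
        """Return physical block ids for the sequence's full blocks and how
        many of them were cache hits (KV reused instead of recomputed)."""
        physical: List[int] = []
        hits = 0
        for h in block_hashes(tokens):
            if h in self.hash_to_block:
                hits += 1
            else:
                self.hash_to_block[h] = self.num_blocks
                self.num_blocks += 1
            physical.append(self.hash_to_block[h])
        return physical, hits


cache = ToyPrefixCache()
shared_prefix = list(range(40))  # e.g. a common system prompt: 2 full blocks + 8 tokens
seq_a, hits_a = cache.allocate(shared_prefix + [1000, 1001, 1002] * 10)
seq_b, hits_b = cache.allocate(shared_prefix + [2000, 2001] * 12)
print(seq_a, hits_a)
print(seq_b, hits_b)
```

The second sequence reuses the first two physical blocks of the first one. Its third block is new even though it starts with the same 8 prefix tokens, because only whole matching blocks can be shared.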

Tests:

We performed 2 test runs on a sampled set of 200 sequences with lengths between 100 and 32K tokens.

All tests were run with benchmark_serving.py set to --max-concurrency 100 --request-rate 100, which allows up to 100 sequences to be processed in parallel. In the first run, prefix caching was disabled; in the second run, it was enabled.

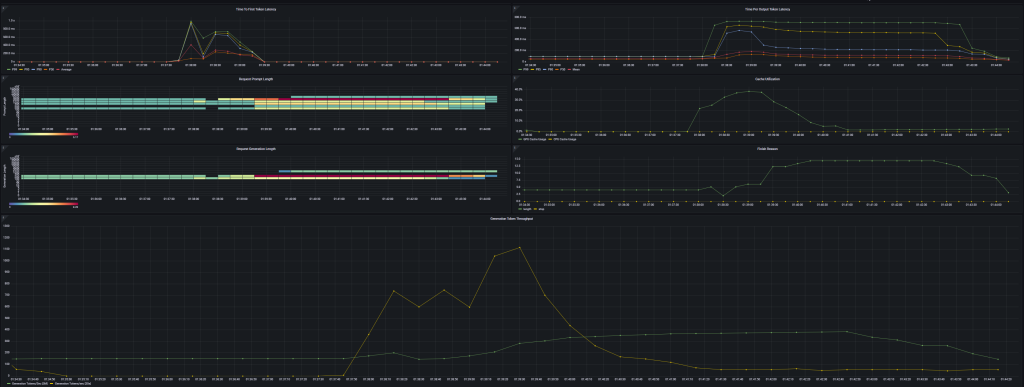

The first test run with prefix caching disabled.

Command: --model=deepseek-ai/DeepSeek-R1-Distill-Llama-70B --trust-remote-code --device=cuda --disable-log-requests --gpu-memory-utilization=0.95 --tensor-parallel-size=8 --max-model-len=131072 --max-num-batched-tokens=98304 --enable-chunked-prefill --num-scheduler-steps=16 --enable-prefix-caching=False

| Serving benchmark result | Value |
|---|---|
| Successful requests | 200 |
| Benchmark duration (s) | 418.44 |
| Total input tokens | 576043 |
| Total generated tokens | 121122 |
| Request throughput (req/s) | 0.48 |
| Output token throughput (tok/s) | 289.46 |
| Total token throughput (tok/s) | 1666.11 |

| Latency (ms) | Mean | Median | P25 | P75 | P99 |
|---|---|---|---|---|---|
| Time to First Token (TTFT) | 9784.81 | 6548.23 | 1434.27 | 20340.68 | 30884.61 |
| Time per Output Token, excl. 1st token (TPOT) | 121.24 | 126.38 | 77.74 | 156.59 | 218.04 |
| Inter-token Latency (ITL) | 87.59 | 54.01 | 32.46 | 62.95 | 802.76 |
| End-to-end Latency (E2EL) | 62743.91 | 56229.67 | 35580.18 | 81381.11 | 181242.39 |

The second test run with prefix caching enabled.

Command: --model=deepseek-ai/DeepSeek-R1-Distill-Llama-70B --trust-remote-code --device=cuda --disable-log-requests --gpu-memory-utilization=0.95 --tensor-parallel-size=8 --max-model-len=131072 --max-num-batched-tokens=98304 --enable-chunked-prefill --num-scheduler-steps=16 --enable-prefix-caching

| Serving benchmark result | Value |
|---|---|
| Successful requests | 200 |
| Benchmark duration (s) | 400.03 |
| Total input tokens | 576043 |
| Total generated tokens | 121201 |
| Request throughput (req/s) | 0.50 |
| Output token throughput (tok/s) | 302.98 |
| Total token throughput (tok/s) | 1742.99 |

| Latency (ms) | Mean | Median | P25 | P75 | P99 |
|---|---|---|---|---|---|
| Time to First Token (TTFT) | 5467.44 | 4199.09 | 1362.89 | 12196.01 | 12701.14 |
| Time per Output Token, excl. 1st token (TPOT) | 104.08 | 111.72 | 71.99 | 130.72 | 161.46 |
| Inter-token Latency (ITL) | 79.00 | 54.28 | 34.16 | 64.57 | 749.00 |
| End-to-end Latency (E2EL) | 53263.45 | 44556.97 | 29408.98 | 69701.58 | 145387.67 |

Conclusion:

Our test results show that enabling prefix caching leads to notable performance improvements across multiple metrics. The mean Time to First Token (TTFT) decreased by approximately 44.1% (from 9784.81 ms to 5467.44 ms), while the median TTFT improved by around 35.9% (from 6548.23 ms to 4199.09 ms). Additionally, the end-to-end latency showed a significant reduction, with the mean decreasing by 15.1% and the median by 20.8%. Other key latency metrics such as Time per Output Token (TPOT) and Inter-token Latency (ITL) also exhibited moderate improvements: mean TPOT dropped by 14.2%, and mean ITL by 9.8%.

These results demonstrate that enabling prefix caching can substantially enhance the responsiveness and throughput efficiency of the model in high-concurrency serving scenarios.

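One downside of prefix caching is that vLLM currently keeps the prefix cache in GPU memory, in the same KV-cache block pool that running requests use. Cached prefixes therefore compete with active sequences for space and are evicted when the pool runs short, so make sure your deployment leaves enough KV-cache capacity for the cache to pay off.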
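Since the prefix cache has to fit in GPU memory, a rough estimate of how many tokens of KV cache the GPUs can hold helps judge how much room is left for cached prefixes. The sketch below takes --gpu-memory-utilization=0.95 and --tensor-parallel-size=8 from our commands and assumes a nominal 80 GiB per GPU, weights split evenly across the 8 GPUs, a block size of 16 tokens, and no activation or CUDA-graph overhead, so it is only an upper bound; vLLM reports the number of KV-cache blocks it actually allocates in its startup log.

```python
# Values from our launch flags and the model characteristics above
GPU_MEMORY_UTILIZATION = 0.95               # --gpu-memory-utilization=0.95
TP_SIZE = 8                                 # --tensor-parallel-size=8
WEIGHT_BYTES = 70.6e9 * 2                   # 70.6B parameters in BF16
KV_BYTES_PER_TOKEN = 2 * 80 * 8 * 128 * 2   # K+V x layers x KV heads x head_dim x bytes

# Assumptions, not measurements
GPU_MEMORY_BYTES = 80 * 2**30               # nominal A100 80 GB capacity
BLOCK_SIZE = 16                             # tokens per KV-cache block


def kv_token_capacity() -> float:
    """Upper bound on tokens the KV cache can hold (ignores activations,
    CUDA graphs and other runtime overhead)."""
    budget_per_gpu = GPU_MEMORY_BYTES * GPU_MEMORY_UTILIZATION
    weights_per_gpu = WEIGHT_BYTES / TP_SIZE
    # 8 KV heads over 8 GPUs: each GPU stores one head's K/V for every token,
    # so per-GPU token capacity is also the capacity of the whole TP group
    kv_per_token_per_gpu = KV_BYTES_PER_TOKEN / TP_SIZE
    return (budget_per_gpu - weights_per_gpu) / kv_per_token_per_gpu


tokens = kv_token_capacity()
print(f"~{tokens / 1e6:.2f}M tokens (~{int(tokens) // BLOCK_SIZE} blocks of {BLOCK_SIZE})")
print(f"~{tokens / 131072:.1f} sequences at the full 131072-token context")
```

Under these assumptions the pool holds roughly 1.56 million tokens, shared by running requests and cached prefixes.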

If you have any questions, we are just a click away.