DataPower DevOps with pipeline example

17.04.2023

Is all your DataPower business logic saved securely in your code repository?

Can you easily track changes in your DataPower applications?

Can you repeatably deploy your DataPower applications in a few minutes in any of your environments?

If the answer to any of these questions is no and your team still propagates changes through your DataPower environments manually, this article can help you transform your DataPower development and maintenance into a robust and repeatable process.

DataPower applications and environments

We won’t go into too many details about IBM DataPower Gateway basics, but we can say that DataPower applications consist mostly of:

- DataPower objects containing logic and configuration, for example:

  - Rules consisting of a list of actions that are performed on the incoming requests or the responses returned by DataPower

  - Match objects matching relative URLs of incoming requests

  - Port numbers on which the DataPower services will listen for incoming requests

- DataPower files containing logic and configuration, saved in the local: directory, for example:

  - XSL or GatewayScript files with custom logic

  - XML or JSON files with configuration values for the environment

- Secrets used by DataPower objects and code, saved in the encrypted cert: directory, for example:

  - Certificates

  - Private keys

  - Other secrets

In DataPower, we can separate multiple environments using DataPower domains. However, it is a good practice to use different appliances for production and non-production environments.

Development of DataPower applications usually consists of performing a set of changes in your development DataPower environment (maybe even a Docker container on your laptop) using the DataPower admin web UI. Some steps in this process can be sped up considerably with tools such as DataPower Commander, which can copy multiple files and directories to an appliance or even sync changes to XSL and GatewayScript files on your local machine with the files on the appliance. (Please check other articles for more details about DataPower Commander.) However, this process is usually performed directly on DataPower, which makes it harder to:

- Save configuration & code on the code repository (usually Git)

- Track changes in configuration & code

- Deploy changes to higher environments

There is a deployment policy mechanism that you can use to make the propagation of changes through environments easier, but it still can’t address all the issues mentioned above.

A modern development and operation approach

We want to be able to develop and maintain DataPower applications the same way we develop applications in other environments:

- Keep code and configuration in a code repository

- Keep secrets in a secrets storage

- Repeatably deploy applications to different environments using automated pipelines

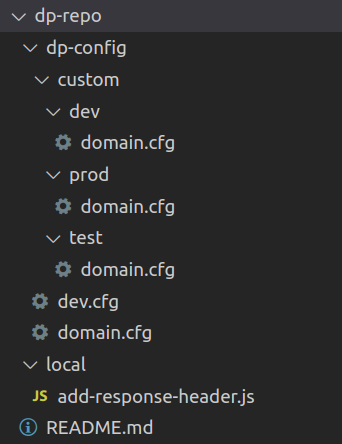

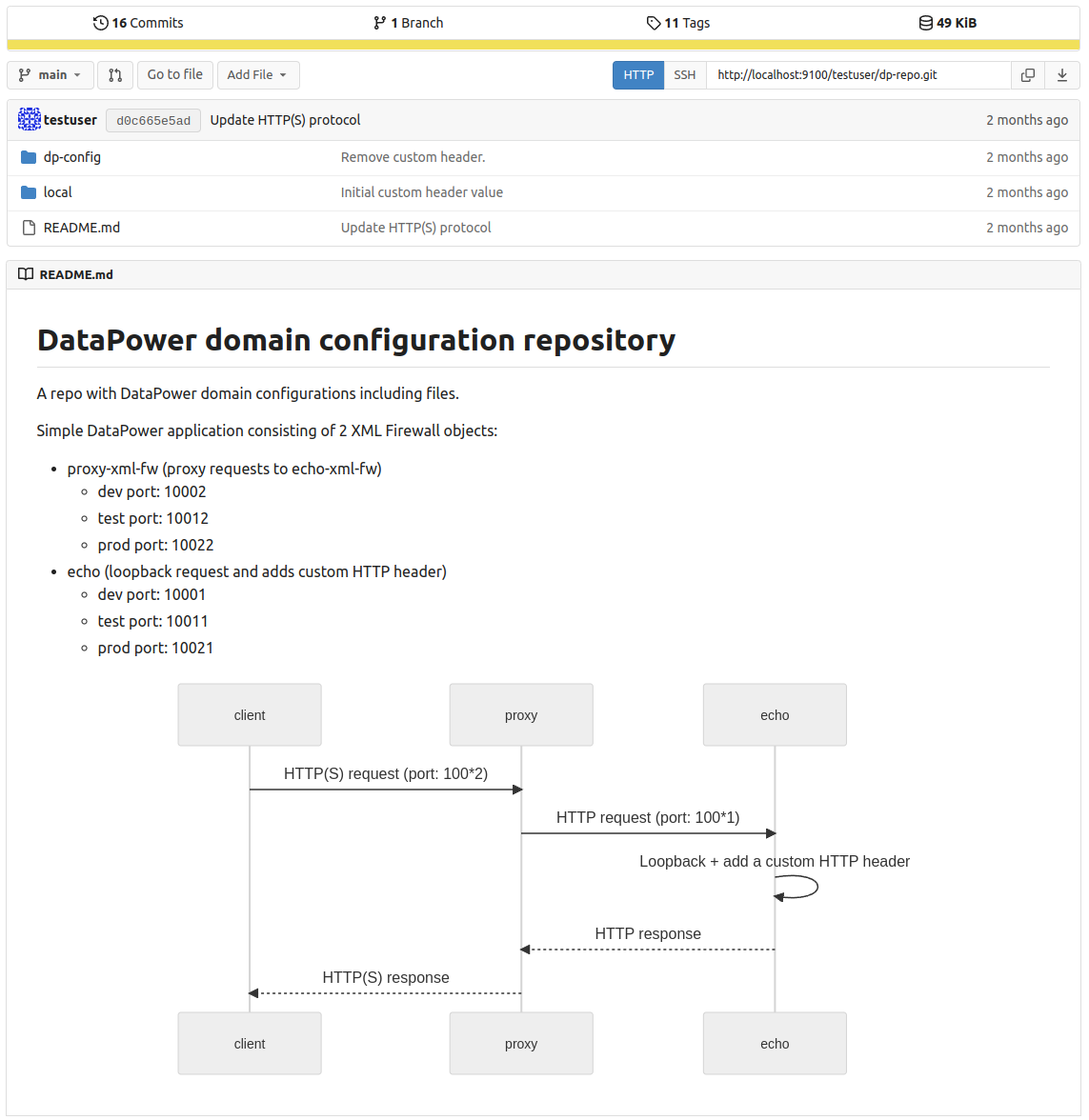

Example DataPower code repository

We will see that it is easy to apply this approach to your DataPower development and maintenance with just a little bit of discipline. This approach can make your DataPower application changes traceable and you can deploy them repeatably to any of your environments.

It is fine to use the IDG (IBM DataPower Gateway) web UI, CLI, dpcmder or a similar tool to develop a DataPower solution by applying iterative changes and testing the behaviour on your development DataPower appliance. However, the same approach is not a good way to propagate changes to higher environments.

The proper way to maintain your IDG solution must ensure all changes are tracked through your code repository. For example, after you are satisfied with the changes prepared in your development IDG environment:

- Add all changes to the code repository, for example:

  - Pull the latest version of code from the code repository to the local machine

  - Export/copy configuration from the IDG to the local machine

  - Compare the latest code in the repo with changes pulled from the IDG and apply changes to the repo

  - Update the environment-specific configurations if new values need to be added

  - Commit changes to the code repo

- Run CD to the next environment (the first deployment can target the dev environment to make sure everything is properly prepared)
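
The compare step in the list above can be as simple as a recursive diff between the repository working copy and the files exported from the IDG. The sketch below is only an illustration and not part of the original setup; the function name and both directory paths are hypothetical.

```shell
# Sketch: compare the repo working copy with files exported from the development IDG.
# compare_export_with_repo REPO_DIR EXPORT_DIR
# Prints a unified diff. Exit status follows diff: 0 when both trees match,
# 1 when they differ, 2 when diff itself ran into trouble.
compare_export_with_repo() {
    diff -ru "$1" "$2"
}

# Hypothetical usage, after "git pull" and an export into ./idg-export:
#   compare_export_with_repo ./dp-repo/local ./idg-export/local
```

Reviewing this diff before each commit keeps unintended changes made on the appliance out of the repository.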

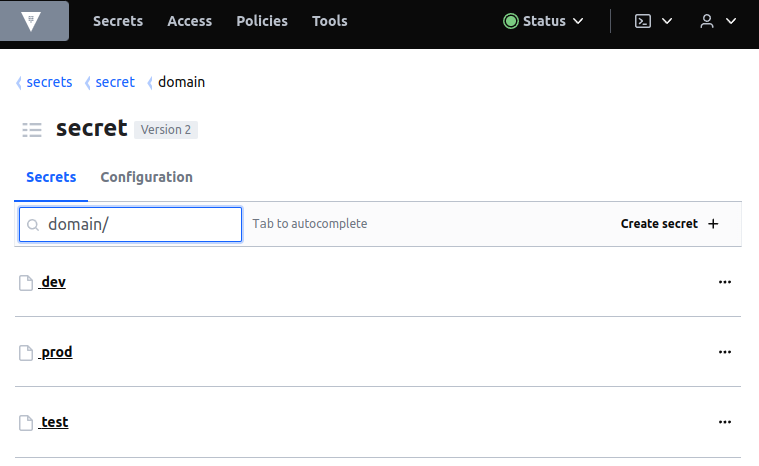

Example vault secrets - certificates and private keys for all environments

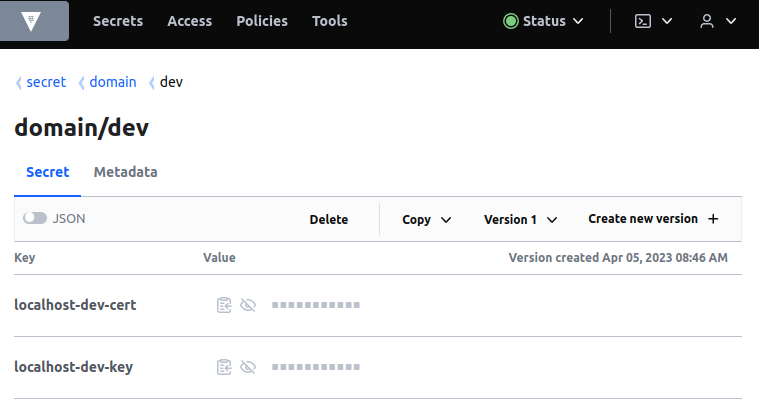

Example vault secrets - certificate and private key for dev environment

When we deploy an IDG application, usually we need to:

- Import certificates and private keys to the cert: filestore

- Import files to the local: filestore

- Import configuration (XML or CFG), for example:

  - Import updated XML configuration or ZIP archive containing local files and updated XML configuration

  - Upload the updated CFG file to the appliance and restart the domain (causes downtime)

  - Upload the updated CFG file to the appliance and run the exec command on that file

  - Exec the CFG file from a remote location

If you keep XML configuration in your code repository, make sure you normalize and sort it after the export from the appliance; this makes comparing configurations and tracking changes easier.

In this example, we used the CFG + exec approach because CFG files are less verbose and more human-readable, and because it is straightforward to apply environment-specific customizations to the CFG files. Here is a short excerpt of the CFG file for the example application, copied from the dev environment:

    top; configure terminal;

    # ...

    xmlfirewall "proxy-xml-fw"
      local-address "0.0.0.0" "10002"
      summary "a proxy XML Firewall Service"
      priority normal
      default-param-namespace "http://www.datapower.com/param/config"
      query-param-namespace "http://www.datapower.com/param/query"
      no force-policy-exec
      monitor-processing-policy terminate-at-first-throttle
      debugger-type internal
      debug-history 25
      remote-address "127.0.0.1" "10001"
      xml-manager default
      stylesheet-policy proxy-xml-fw-policy
      max-message-size 0
      request-type preprocessed
      response-type preprocessed
      request-attachments strip
      response-attachments strip
      root-part-not-first-action process-in-order
      front-attachment-format dynamic
      back-attachment-format dynamic
      mime-headers
      rewrite-errors
      delay-errors
      delay-errors-duration 1000
      soap-schema-url "store:///schemas/soap-envelope.xsd"
      wsdl-response-policy off
      no firewall-parser-limits
      bytes-scanned 4194304
      element-depth 512
      attribute-count 128
      max-node-size 33554432
      forbid-external-references
      max-prefixes 1024
      max-namespaces 1024
      max-local-names 60000
      attachment-byte-count 2000000000
      attachment-package-byte-count 0
      external-references forbid
      credential-charset protocol
      ssl-config-type server
      ssl-server proxy-xml-fw-server-profile
    exit

    # ...

If we want to customize the XML Firewall “proxy-xml-fw” from the above excerpt, for example to change the port the service listens on and the remote port the XML Firewall service connects to, we just need to prepare an environment-specific CFG file and apply it after the base CFG file:

    xmlfirewall "proxy-xml-fw"
      local-address "0.0.0.0" "10012"
      remote-address "127.0.0.1" "10011"
    exit
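
Applying the environment-specific file after the base file can also be done by building one combined CFG file, which is what the example pipeline later in this article does with sed and cat. The sketch below wraps those two steps in a function; the function name and file paths are hypothetical, and it assumes the base file contains the "top; configure terminal;" line that has to be commented out before the file is run with exec-config.

```shell
# Sketch: build the CFG file that is applied to one environment.
# compose_cfg BASE_CFG ENV_CFG OUTPUT_CFG
compose_cfg() {
    # Comment out the "top; configure terminal;" preamble of the base file.
    sed 's/top; configure terminal;/# top; configure terminal;/' "$1" > "$3"
    # Append the environment-specific statements so they are applied after the base ones.
    cat "$2" >> "$3"
}

# Hypothetical usage:
#   compose_cfg ./dp-config/domain.cfg ./dp-config/custom/dev/domain.cfg ./tmp.cfg
```

Keeping only the differing values in the per-environment file keeps those files short and makes it obvious what distinguishes one environment from another.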

Example DataPower DevOps setup

Here is a detailed description of the DataPower DevOps setup. The building blocks required to set up DataPower DevOps consist of the main components and the commands that help our pipeline interact with DataPower, the package repository and the secrets storage:

- Main components used:

  - IBM DataPower Gateway (one or more appliances we manage)

  - Jenkins (pipeline build and deploy) – can be replaced with GitHub Actions, GitLab CI/CD or some other solution

  - Code repository (stores and tracks changes in code & configuration)

  - HashiCorp Vault (secrets storage) – can be replaced with some other secret management

- Main commands used for deployment:

  - dpctl: a non-interactive command-line tool developed in our company that simplifies DataPower management. These are the commands used in this example:

    - dpctl domain delete: deletes a domain

    - dpctl domain create: creates a domain

    - dpctl file create: creates a file with given contents on a DataPower appliance (used for certs upload)

    - dpctl file upload: uploads a file or directory to a DataPower appliance

    - dpctl action exec-config: runs a configuration script

    - dpctl domain save: saves a domain

The process of setting up main components is out of the scope of this article but your organization probably already uses most of the main components needed for the process.

Make sure the above-mentioned commands are available to your pipeline. In the case of the example Jenkins solution, that means we needed to copy and/or install those commands on the Jenkins server, which can be achieved by running commands similar to:

    vedran@my-laptop:~$ scp /usr/local/bin/dpctl vedran@jenkins.server:/usr/local/sbin/dpctl
    vedran@my-laptop:~$ ssh vedran@jenkins.server
    vedran@jenkins.server:~$ sudo apt install jq
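
It can help to let the pipeline fail fast when one of these tools is missing on the build server. The following is a minimal sketch of such a check; the function name is hypothetical, and the list of commands in the usage line is simply the set of tools the example pipeline in this article calls.

```shell
# Sketch: verify that every command the pipeline relies on is installed.
# require_commands CMD...
# Prints each missing command to stderr and returns non-zero if any is missing.
require_commands() {
    missing=0
    for cmd in "$@"; do
        if ! command -v "$cmd" >/dev/null 2>&1; then
            echo "missing command: $cmd" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Hypothetical usage as the first step of a pipeline:
#   require_commands dpctl jq curl tar sed git
```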

Use your CI/CD system mechanism to save credentials your pipeline will need to connect to the following systems:

- IBM DataPower Gateway admin user

- Git user with access to your DataPower project

- Secrets store token

(In Jenkins, these are added through “Manage Credentials” settings)

After that, you can create a pipeline that can be used to build and deploy your DataPower application in different environments (different DataPower appliances and/or different domains).

Example DataPower applications

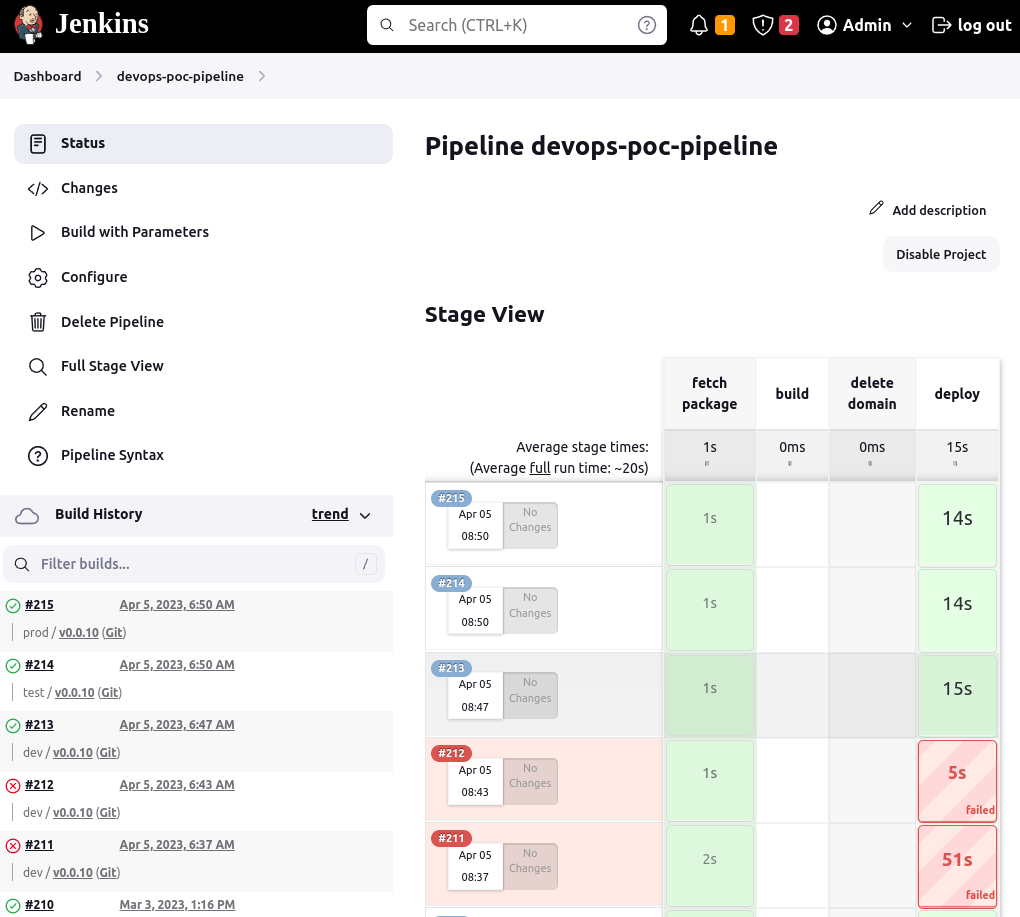

Example DataPower DevOps Jenkins pipeline

Here is an example of the Jenkins pipeline developed using the above-mentioned approach and applying it to different domains in a single IBM DataPower Gateway appliance:

    def packageUrl = ''
    def packageExists = true
    def gitTag = 'v0.0.0'

    node {
        if("${GIT_TAG}" != "v0.0.0") {
            gitTag = "${GIT_TAG}"
            writeFile(file: 'git-tag.txt', text: "${GIT_TAG}")
        }
        else if(fileExists(file: 'git-tag.txt')) {
            gitTag = readFile(file: 'git-tag.txt')
        }
        currentBuild.description = "${DOMAIN} / ${GIT_TAG} (Git)"
    }

    @NonCPS
    def jsonToMap(jsonValue) {
        def jsonSlurper = new groovy.json.JsonSlurper()
        def mapValues = new java.util.HashMap(jsonSlurper.parseText(jsonValue))
        return mapValues
    }

    pipeline {
        agent any
        parameters {
            string(name: 'IDG_HOSTNAME', defaultValue: 'idg.my.domain', description: 'DataPower hostname')
            string(name: 'VAULT_HOSTNAME', defaultValue: 'vault.my.domain', description: 'HashiCorp Vault hostname')
            choice(name: 'DOMAIN', choices: ['test', 'dev', 'prod'], description: 'DataPower domain')
            booleanParam(name: 'DELETE_DOMAIN', defaultValue: false, description: 'Delete domain before deploy')
            string(name: 'GIT_TAG', defaultValue: "${gitTag}", description: 'Deploy a specific app version (git tag & package version).')
        }
        stages {
            stage("fetch package") {
                steps {
                    script {
                        packageUrl = "http://gitea.my.domain:3000/api/packages/testuser/generic/dp-repo/${GIT_TAG}/dp-repo-${GIT_TAG}.tgz"
                    }
                    echo "Fetching package ${packageUrl}"
                    sh "curl ${packageUrl} --output 'dp-repo-${GIT_TAG}.tgz'"
                    script {
                        // A missing package is detected from the error text returned instead of the archive
                        def dpRepo = readFile(file: "dp-repo-${GIT_TAG}.tgz")
                        if(dpRepo == 'Package file does not exist' || dpRepo == 'Package does not exist') {
                            packageExists = false
                        }
                        else {
                            sh "tar -xvf dp-repo-${GIT_TAG}.tgz"
                        }
                    }
                    echo "Package exists: ${packageExists}"
                }
            }
            stage("build") {
                when { expression { !packageExists } }
                steps {
                    echo "Cloning repository..."
                    git branch: 'main', credentialsId: 'git-user', url: 'http://gitea.my.domain:3000/testuser/dp-repo.git'
                    sh 'git checkout "${GIT_TAG}"'
                    echo "...repository cloned and prepared build."
                    sh 'tar -czvf dp-repo.tgz local dp-config'
                    echo "Archive dp-repo.tgz built."
                    sh 'ls -lh dp-repo.tgz'
                    withCredentials([usernamePassword(credentialsId: 'git-user', passwordVariable: 'GIT_PASS', usernameVariable: 'GIT_USER')]) {
                        // \$ leaves the credentials for the shell to expand, so they are not interpolated into the Groovy string
                        sh "curl ${packageUrl} --upload-file dp-repo.tgz -XPUT -u \${GIT_USER}:\${GIT_PASS}"
                        echo "Archive dp-repo.tgz uploaded to the remote package repo: '${packageUrl}'"
                    }
                }
            }
            stage("delete domain") {
                when { expression { params.DELETE_DOMAIN } }
                steps {
                    echo "Starting deletion of domain: '${DOMAIN}'..."
                    withCredentials([usernamePassword(credentialsId: 'idg-user', passwordVariable: 'IDG_PASS', usernameVariable: 'IDG_USER')]) {
                        sh 'echo "y" | dpctl -s ${IDG_HOSTNAME} -u ${IDG_USER} -p ${IDG_PASS} -i domains delete ${DOMAIN}'
                    }
                    echo "...domain deleted: '${DOMAIN}'"
                }
            }
            stage('deploy') {
                steps {
                    echo "Starting deploy to domain: '${DOMAIN}'"
                    echo "Creating domain (if it doesn't exist): '${DOMAIN}'."
                    withCredentials([usernamePassword(credentialsId: 'idg-user', passwordVariable: 'IDG_PASS', usernameVariable: 'IDG_USER')]) {
                        sh 'dpctl -s ${IDG_HOSTNAME} -u ${IDG_USER} -p ${IDG_PASS} -i domains create ${DOMAIN}'
                        sh 'dpctl -s ${IDG_HOSTNAME} -u ${IDG_USER} -p ${IDG_PASS} -i domains save default'
                    }
                    echo "Creating local:///version file in domain: '${DOMAIN}'."
                    withCredentials([usernamePassword(credentialsId: 'idg-user', passwordVariable: 'IDG_PASS', usernameVariable: 'IDG_USER')]) {
                        sh 'dpctl -s ${IDG_HOSTNAME} -u ${IDG_USER} -p ${IDG_PASS} -i file create -domain ${DOMAIN} -contents "${GIT_TAG}" "local:/version"'
                    }
                    echo "Copy secrets from vault to domain: '${DOMAIN}'."
                    script {
                        def vaultSecretsJson = ''
                        withCredentials([string(credentialsId: 'vault-token', variable: 'VAULT_TOKEN')]) {
                            // Fetch secrets from Vault
                            vaultSecretsJson = sh (
                                script: 'curl -s -H "x-vault-token: ${VAULT_TOKEN}" "${VAULT_HOSTNAME}:8200/v1/secret/data/domain/${DOMAIN}" | jq ".data.data"',
                                returnStdout: true
                            )
                        }
                        def vaultSecrets = jsonToMap(vaultSecretsJson)
                        vaultSecrets.each { name, secret ->
                            withCredentials([usernamePassword(credentialsId: 'idg-user', passwordVariable: 'IDG_PASS', usernameVariable: 'IDG_USER')]) {
                                wrap([$class: 'MaskPasswordsBuildWrapper', varPasswordPairs: [[password: secret]]]) {
                                    withEnv(["name=${name}", "secret=${secret}"]) {
                                        echo "Create secret '${name}' in domain: '${DOMAIN}'."
                                        sh (
                                            script: '''
                                                # Don't show command (secret) in Jenkins log
                                                set +x
                                                dpctl -s ${IDG_HOSTNAME} -u ${IDG_USER} -p ${IDG_PASS} -i file create -domain ${DOMAIN} -contents "${secret}" "cert:/${name}"
                                            ''',
                                            label: "Create secret ${name}"
                                        )
                                    }
                                }
                            }
                        }
                    }
                    withCredentials([usernamePassword(credentialsId: 'idg-user', passwordVariable: 'IDG_PASS', usernameVariable: 'IDG_USER')]) {
                        echo "Copy local files to domain: '${DOMAIN}'."
                        sh 'dpctl -s ${IDG_HOSTNAME} -u ${IDG_USER} -p ${IDG_PASS} -i file upload -domain ${DOMAIN} -d -from ./local -to local:'
                        // We need to comment out the first line with "top; configure terminal;" before we can run "action exec-config" on that config
                        echo "Preparing config file ./tmp.cfg for domain: '${DOMAIN}'."
                        sh 'cp ./dp-config/domain.cfg ./tmp.cfg'
                        sh 'sed -i "s/top; configure terminal;/# top; configure terminal;/" ./tmp.cfg'
                        sh 'cat ./dp-config/custom/${DOMAIN}/domain.cfg >> ./tmp.cfg'
                        echo "Copy config file ./tmp.cfg to domain: '${DOMAIN}' temporary:/domain.cfg."
                        sh 'dpctl -s ${IDG_HOSTNAME} -u ${IDG_USER} -p ${IDG_PASS} -i file upload -domain ${DOMAIN} -f -from ./tmp.cfg -to temporary:/domain.cfg'
                        echo "Applying temporary:/domain.cfg to domain: '${DOMAIN}'."
                        sh 'dpctl -s ${IDG_HOSTNAME} -u ${IDG_USER} -p ${IDG_PASS} -i action exec-config -domain ${DOMAIN} temporary:/domain.cfg'
                        echo "Saving domain: '${DOMAIN}'."
                        sh 'dpctl -s ${IDG_HOSTNAME} -u ${IDG_USER} -p ${IDG_PASS} -i domain save ${DOMAIN}'
                    }
                }
            }
        }
    }
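
The fetch package stage above decides whether the package exists by comparing the downloaded body with two error messages. A possible alternative, sketched below, relies on the HTTP status instead: curl's --fail option makes curl exit with a non-zero status when the server answers with an HTTP error. This assumes the package registry returns an error status such as 404 for a missing package, which you should verify for your registry; the function name is hypothetical.

```shell
# Sketch: download a package and report through the exit status whether it exists.
# fetch_package URL OUTPUT_FILE
# Returns 0 when the download succeeds and non-zero when the server answers
# with an HTTP error (for example 404) or cannot be reached.
fetch_package() {
    curl --fail --silent --show-error --location --output "$2" "$1"
}

# Hypothetical usage:
#   if fetch_package "$PACKAGE_URL" dp-repo.tgz; then tar -xvf dp-repo.tgz; fi
```

In a Jenkins pipeline the exit status of such a command can be captured with the returnStatus option of the sh step and used to set packageExists.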

Example Jenkins DataPower pipeline

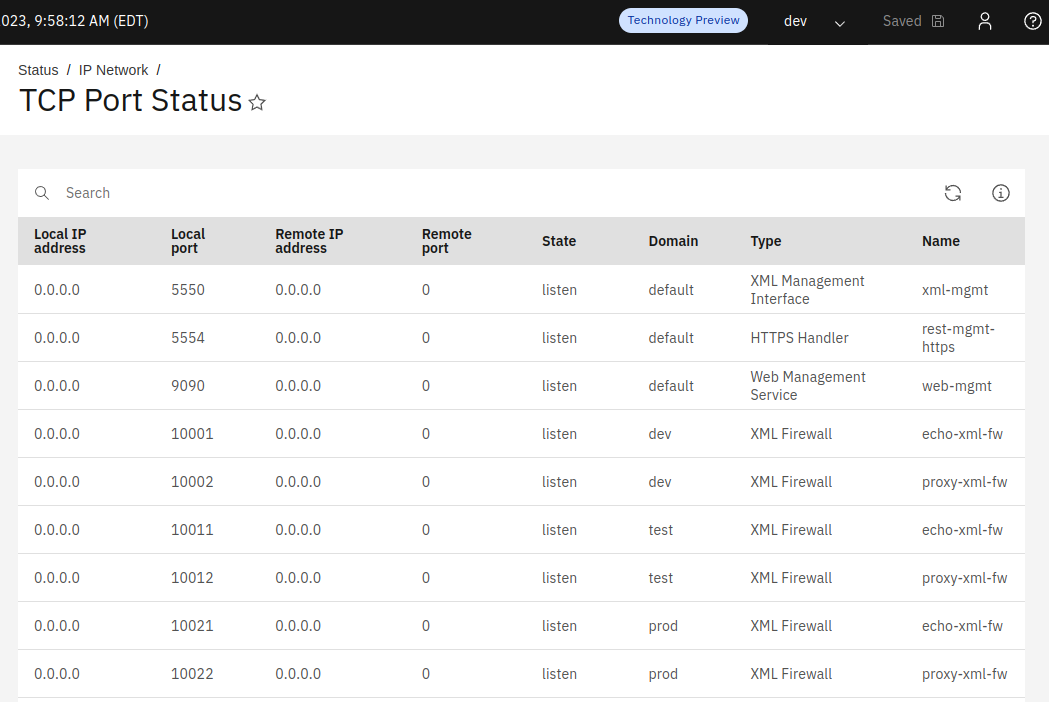

Example applications deployed on DataPower listen on environment specific ports

The previous example was prepared in a self-contained docker-compose environment for demo purposes. It is set up to run 4 containers based on the images of the following products:

You can adapt this example to your environment, based on the code repository and CI/CD product you use. Everything you need is in the example Jenkins pipeline above. The dpctl command is the only ingredient that is not publicly available, so you will need to either replace it with more complex custom curl calls or contact us to find out how to get the dpctl command and make your pipeline simpler.

Whichever path you take, use the force of the modern DevOps approach to simplify the deployment and maintenance of your DataPower solutions and to track changes you introduce into your DataPower environments.