Introduction

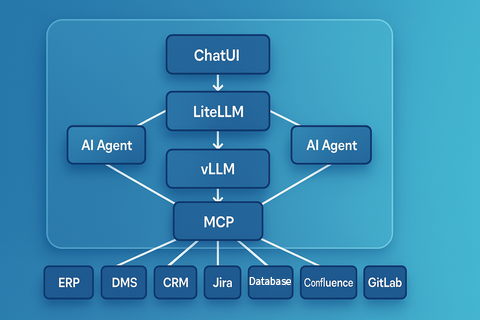

There is a lot of talk about the need for AI business transformation but not much about the practical “how”. Challenges such as strict data control and compliance requirements, complex integrations, hardware constraints, and security considerations often deter even the most enthusiastic adopters. However, new technologies such as vLLM, which I covered in the last two posts (first, second), open-weight LLMs, and the Model Context Protocol (MCP), which I cover in this one, are increasingly effective at addressing these challenges. In this post, I describe how to use these technologies to build a self-hosted AI platform and integrate it into existing enterprise IT infrastructure, so you can bring AI automation to your whole organization.

We’ll look at a custom AI platform that:

- Deploys self-hosted LLMs using the open-source vLLM engine and open weight LLMs, so your data never leaves your secure environment.

- Integrates seamlessly with your DMS, ERP, CRM, DevOps tools, and more, thanks to the Model Context Protocol (MCP).

- Provides a powerful ChatUI for human-AI collaboration, with advanced agentic automation to handle business tasks.

- Offers full visibility via open-source observability tools (Prometheus, Grafana) and in-depth LLM tracing with LangFuse.

- Secures every layer with your existing identity and access control infrastructure, eliminating any external dependencies.

Why a Self-Hosted AI Platform?

Running a self-hosted AI Platform addresses several crucial concerns:

- Data Control & Compliance

Your data never goes through public external networks or third-party servers, which can help meet restrictive regulatory or contractual requirements. For instance, sensitive customer information remains locked within your own network and virtual machines.

- Security

Since the entire solution lives in your managed environment, it leverages your existing firewalls, network segmentation, and identity solutions. Any potential leakage or breach is confined to your own infrastructure rather than a SaaS provider.

- Customization

Open-source solutions let you shape the platform to your unique needs. You’re free from vendor lock-in and can integrate new tools or frameworks easily.

- Performance

Modern hardware (CPUs, GPUs) can deliver excellent LLM serving performance, especially with optimized inference engines like vLLM, whether you rent VMs or run your own machines.

Ultimately, self-hosted deployments give enterprises peace of mind: all data, logs, traces, and various artifacts remain in-house, drastically reducing risks related to privacy or compliance.

Core Components of the AI Platform

1. LLM Model Runtime: vLLM

At the heart of the platform is vLLM, an open-source library and server specifically optimized for high-performance LLM inference. It is one of the most active open-source projects today, with backing from many major enterprise software vendors. I covered it in detail in the previous posts, so follow the links there for more.
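To make this concrete, here is a minimal sketch of how a client could talk to a vLLM server through its OpenAI-compatible chat completions endpoint. The host, port, and model name are assumptions for illustration; replace them with whatever your deployment serves. The sketch uses only the Python standard library and separates building the request from sending it, so you can inspect the payload without a running server.

```python
import json
import urllib.request

# Assumed values for illustration only; adjust to your own deployment.
VLLM_BASE_URL = "http://localhost:8000/v1"  # hypothetical internal address
MODEL_NAME = "my-open-weight-model"         # hypothetical served model name


def build_chat_request(prompt: str, model: str = MODEL_NAME,
                       max_tokens: int = 256) -> urllib.request.Request:
    """Build (but do not send) a chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{VLLM_BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=60) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Calling `ask("Summarize our onboarding policy")` would only work against a running server, and the request never has to leave your network.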

2. LLM Gateway: LiteLLM

If vLLM is the “brain” (running the model), LiteLLM is the “traffic controller”. LiteLLM serves as the central gateway to our AI platform and, even in its open-source variant, offers a robust suite of features:

- API Call Tracking: Monitors and logs all API requests to provide detailed insights into usage patterns.

- Batches API: Facilitates efficient handling of batch requests, allowing multiple operations to be processed simultaneously.

- Guardrails: Implements safety measures to ensure responsible AI deployment by monitoring and controlling model outputs.

- Model Access: Manages permissions and controls access to various models, ensuring secure and organized usage.

- Budgets: Lets you set and monitor usage limits for model access, so you can manage the load that LLM operations generate.

- Rate Limiting: Controls the rate of incoming requests to maintain system stability and prevent abuse.

- Prompt Management: Assists in organizing and reusing prompts to streamline interactions with LLMs.

LiteLLM decouples models from their clients, making it easy to replace models or spin up extra capacity without rewriting your client services. It also allows you to enforce access policies, usage quotas, and logging at a central point.
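The decoupling idea can be sketched in a few lines. This is a toy illustration of the gateway pattern, not LiteLLM’s actual implementation or configuration format: the class, the alias names, the backend URLs, and the budget numbers are all hypothetical. Clients only know a stable alias, while the gateway decides which backend serves it and whether the caller still has budget left.

```python
from dataclasses import dataclass, field


@dataclass
class Gateway:
    """Toy gateway: maps model aliases to backends and enforces token budgets."""
    routes: dict[str, str]   # public alias -> backend URL (hypothetical)
    budgets: dict[str, int]  # API key -> remaining token budget
    usage_log: list[tuple[str, str, int]] = field(default_factory=list)

    def route(self, api_key: str, alias: str, tokens: int) -> str:
        """Return the backend for an alias, or raise if the request is not allowed."""
        if alias not in self.routes:
            raise KeyError(f"unknown model alias: {alias}")
        remaining = self.budgets.get(api_key, 0)
        if tokens > remaining:
            raise PermissionError(f"budget exceeded for key {api_key!r}")
        # Deduct the budget and record the call at one central point.
        self.budgets[api_key] = remaining - tokens
        self.usage_log.append((api_key, alias, tokens))
        return self.routes[alias]


gateway = Gateway(
    routes={"chat-default": "http://vllm-a.internal:8000/v1"},
    budgets={"team-sales": 1000},
)
```

Pointing `chat-default` at a different backend is a one-line change in the gateway, and no client has to be redeployed.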

3. Model Context Protocol (MCP) Integration Layer

The real power of a business AI platform emerges when the LLM can pull in data from your actual systems (ERPs, CRMs, DMS, Confluence, Git repositories, etc.) and even take action in those systems. That’s where the Model Context Protocol (MCP) comes in.

MCP is a standard interface that “plugs” AI assistants into various enterprise tools. Think of each corporate system (like Jira, Confluence, GitLab) having its own small MCP server (connector), which translates between AI requests and the system’s native APIs. For instance:

- Confluence Connector: The LLM can request pages or documents, get the text to incorporate into an answer, or reference it for context.

- Jira Connector: The AI can retrieve tickets, summarize them, or even create new tickets if you allow it.

- CRM Connector: The AI can fetch or update customer records.

- DMS Connector: The AI can search your documents or help you update and generate new ones.

- Source Control Management (GitLab, GitHub): The AI can analyze code, propose changes, or read documentation.

By standardizing how AI communicates with these services, MCP greatly reduces integration overhead, so you don’t have to write a custom pipeline for every system or every type of AI request. And because you self-host the LLM, the data flows inside your secure network, respecting access permissions.

The result? With MCP integrations, a single ChatUI or AI agent becomes a “one-stop shop” for retrieving documents, updating tasks, or automating business processes. This is where much of the platform’s business value comes from.
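To show what a connector does at its core, here is a heavily simplified sketch. MCP messages are JSON-RPC 2.0, and a server exposes its tools through the `tools/list` and `tools/call` methods. Everything else here is an assumption for illustration: the ticket data is an in-memory stand-in for Jira, the `get_ticket` tool is hypothetical, and the sketch skips the initialization handshake and the transport layer. For real connectors you would use an MCP SDK rather than hand-rolling the protocol.

```python
import json
from typing import Any

# Hypothetical in-memory stand-in for a Jira backend.
FAKE_TICKETS = {"PROJ-1": "Login page times out", "PROJ-2": "Export to PDF fails"}


def get_ticket(key: str) -> str:
    """Translate a tool call into a lookup against the (fake) native system."""
    return FAKE_TICKETS.get(key, "ticket not found")


TOOLS: dict[str, dict[str, Any]] = {
    "get_ticket": {
        "handler": get_ticket,
        "description": "Return the summary of a ticket by key.",
        "inputSchema": {
            "type": "object",
            "properties": {"key": {"type": "string"}},
            "required": ["key"],
        },
    }
}


def _reply(request_id: Any, key: str, value: Any) -> str:
    return json.dumps({"jsonrpc": "2.0", "id": request_id, key: value})


def handle(raw: str) -> str:
    """Handle one JSON-RPC request and return the JSON-RPC response."""
    request = json.loads(raw)
    request_id = request.get("id")
    method, params = request["method"], request.get("params", {})
    if method == "tools/list":
        tools = [
            {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
            for name, t in TOOLS.items()
        ]
        return _reply(request_id, "result", {"tools": tools})
    if method == "tools/call":
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return _reply(request_id, "error", {"code": -32602, "message": "Unknown tool"})
        text = tool["handler"](**params.get("arguments", {}))
        return _reply(request_id, "result", {"content": [{"type": "text", "text": text}]})
    return _reply(request_id, "error", {"code": -32601, "message": "Method not found"})
```

The AI side never sees the native API. It discovers tools via `tools/list` and invokes them via `tools/call`, which is why adding another system means adding another small server, not another custom pipeline.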

4. Chat UI for AI Retrieval & Business Task Automation

On top of these components is a Chat-Based User Interface (ChatUI) that provides:

- Conversational Interactions: Users can type questions like “What’s the latest project timeline?” or “Draft a status update for the operations team.”

- AI-Driven Retrieval: The AI can tap into document management systems (via MCP) to find relevant data and incorporate it into the response.

- Agentic Automation: If allowed, the AI can also perform tasks such as creating Jira issues, checking CRM entries, or updating timesheets automatically, taking routine work off human staff.

- Security & Permissions: It integrates with your corporate identity management so that only authorized users can see and perform allowed actions.

By consolidating all these features into a simple chat interface, employees have easy access to the full range of AI capabilities from quick Q&A to more advanced agentic tasks. It’s like having a digital assistant always on hand, ready to pull knowledge from across the organization or to handle routine tasks with minimal friction.

5. Tracing and Observability

Tracing an AI conversation or automation process can be tricky. That’s where LangFuse shines:

- Captures and Logs each user query, response, and tool invocation.

- Keeps an Audit Trail of which user asked what, and how the AI responded (including external API calls).

- Supports Debugging or compliance checks with a detailed record of events.

- Facilitates Model Improvement by letting you analyze conversation flows, user feedback, and model performance over time.

With LangFuse, if an employee says “The AI gave me a strange answer,” your AI or IT team can quickly drill down to see exactly which prompt was sent, how the AI responded, and whether it invoked the right MCP connector. Because LangFuse is also self-hosted, all data stays under your control.
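As a rough mental model of what such a trace contains, here is a small standard-library sketch. It is not the LangFuse SDK or its data model. The `Trace` and `ToolCall` classes and their field names are hypothetical, chosen only to show the kind of record that lets you answer “who asked what, and which connector was invoked”.

```python
import json
import time
import uuid
from dataclasses import asdict, dataclass, field
from typing import Optional


@dataclass
class ToolCall:
    connector: str   # e.g. the MCP connector that was invoked
    arguments: dict  # arguments the model passed to the tool


@dataclass
class Trace:
    user: str
    prompt: str
    trace_id: str = field(default_factory=lambda: uuid.uuid4().hex)
    started_at: float = field(default_factory=time.time)
    tool_calls: list[ToolCall] = field(default_factory=list)
    response: Optional[str] = None

    def record_tool_call(self, connector: str, arguments: dict) -> None:
        """Append one tool invocation to the audit trail."""
        self.tool_calls.append(ToolCall(connector, arguments))

    def finish(self, response: str) -> None:
        """Store the final answer returned to the user."""
        self.response = response

    def to_json(self) -> str:
        """Serialize the whole trace, including nested tool calls."""
        return json.dumps(asdict(self))


trace = Trace(user="alice", prompt="List the open bugs for Project X")
trace.record_tool_call("jira", {"project": "X", "status": "open"})
trace.finish("There are 2 open bugs.")
```

With records like this, a complaint about a strange answer becomes a lookup by user and time, followed by reading the prompt, the tool calls, and the response in order.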

Just like any other critical enterprise service, your AI platform needs robust observability. Proven tools like Prometheus and Grafana handle this by tracking system health in real time.

- Prometheus collects metrics from each component (ChatUI, LiteLLM, vLLM, MCP servers) and stores them as time-series data.

- Grafana then visualizes these metrics in dashboards (e.g., you can see LLM inference latency, GPU/CPU usage, request throughput, errors, etc.).

With these dashboards, your operations team can spot problems early and scale the platform proactively, which keeps it reliable. And again, because both tools are self-hosted, all data stays under your control.
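For a feel of what Prometheus actually scrapes, the sketch below renders a counter in the Prometheus text exposition format using only the standard library. The metric name `ai_platform_requests_total` and the label names are made up for illustration. In a real service you would normally use an official Prometheus client library and serve the output on a `/metrics` endpoint instead of formatting it by hand.

```python
from collections import Counter


class RequestMetrics:
    """Counts requests per (component, status) and renders them for scraping."""

    def __init__(self) -> None:
        self._requests: Counter = Counter()

    def observe(self, component: str, status: str) -> None:
        """Record one handled request."""
        self._requests[(component, status)] += 1

    def render(self) -> str:
        """Return the counters in Prometheus text exposition format."""
        lines = [
            "# HELP ai_platform_requests_total Requests handled, by component and status.",
            "# TYPE ai_platform_requests_total counter",
        ]
        for (component, status), count in sorted(self._requests.items()):
            lines.append(
                f'ai_platform_requests_total{{component="{component}",status="{status}"}} {count}'
            )
        return "\n".join(lines) + "\n"


metrics = RequestMetrics()
metrics.observe("vllm", "ok")
metrics.observe("vllm", "ok")
metrics.observe("litellm", "error")
print(metrics.render())
```

Grafana then queries these time series from Prometheus, for example to plot the error rate per component.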

6. Identity & Access Control

Finally, everything is integrated with your existing identity management solution, whether that’s Active Directory, Keycloak, or another identity provider. This means:

- Single Sign-On (SSO)

- Role-Based Permissions that govern who can query which systems or run which automation tasks.

- No Data Leaves your environment for authentication or session management.

Your IT security team thus manages AI permissions in the same way they manage any other corporate app, unifying the governance model.
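A role-based check of this kind can be sketched as follows. The role names, the action names, and the idea that roles arrive as a list from the identity provider are assumptions for illustration; your identity solution defines how groups and roles are actually delivered and named.

```python
# Hypothetical mapping from identity-provider roles to permitted actions.
ROLE_PERMISSIONS: dict[str, set[str]] = {
    "developer": {"jira.read", "jira.create", "gitlab.read"},
    "sales": {"crm.read", "crm.update"},
}


def allowed_actions(roles: list[str]) -> set[str]:
    """Union of all actions granted by the user's roles; unknown roles grant nothing."""
    actions: set[str] = set()
    for role in roles:
        actions |= ROLE_PERMISSIONS.get(role, set())
    return actions


def authorize(roles: list[str], action: str) -> None:
    """Raise PermissionError unless one of the user's roles permits the action."""
    if action not in allowed_actions(roles):
        raise PermissionError(f"action {action!r} is not permitted for roles {roles}")


# A developer may create Jira issues; this call passes silently.
authorize(["developer"], "jira.create")
```

Running the same check before every tool invocation means the AI can never do more on a user’s behalf than that user is allowed to do directly.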

Security & Viability: The Self-Hosted Platform Advantage

Central to this architecture is the fact that all components are self-hosted within your infrastructure, whether you own it on-prem or rent it. No traffic leaves your network to consult an external API or run computations on external hardware. This ensures:

- Data Confidentiality: Proprietary or personal data never leaves your servers, greatly reducing the risk of external breaches or compliance violations.

- Regulatory Compliance: For industries like healthcare, finance, or government, self-hosted AI can help satisfy data residency, privacy, or audit mandates.

- No Vendor Lock-In: Because these are open-source projects (vLLM, LiteLLM, LangFuse, Prometheus, Grafana, MCP), you can fork or modify them if needed, or switch to alternatives.

- Deep Customization: You can fine-tune your own LLM with internal data or inject custom security rules, ensuring the AI’s behavior aligns with corporate policies.

In a SaaS scenario, your data might be encrypted in transit, but ultimately it’s processed on someone else’s servers. For many organizations, that’s not acceptable, particularly for intellectual property or sensitive customer information. A self-hosted approach removes that exposure.

Example Business Use Cases

- AI-Powered Knowledge Retrieval:

A new hire could ask, “What’s our process for expense reimbursement?” and the LLM would gather the relevant Confluence page or policy from the Document Management System, returning a concise summary. This quick Q&A reduces time spent searching intranets and outdated PDFs.

- Agentic Software Development:

Your developers can ask, “List the open Jira bugs for Project X,” and the AI can query Jira via MCP. They might then say, “Create a new branch for bug #123 and draft a fix,” prompting the AI to interact with the SCM (e.g. GitHub) to set up the branch and propose code changes. All with proper auditing in LangFuse.

- CRM and Workflow Automation:

A salesperson might say, “Update the CRM with the notes from my last meeting with Client A,” and the AI, referencing the conversation transcript, can automatically insert those notes into the CRM system. No manual copy-paste required.

- HR or Finance Bot:

Staff can ask about pay periods, retrieve timesheet entries, or fill out internal forms. The AI automates repetitive tasks, letting human teams focus on higher-value activities.

Every conversation is logged (for compliance), and every action is performed under the user’s identity. This combination of automation and accountability can save significant time across departments.

Future Outlook

- Custom Fine-Tuning: As you gather data from real interactions, you might fine-tune your LLMs to better reflect your organization’s domain knowledge.

- Additional Connectors: With MCP, it’s straightforward to add new systems or data sources.

- Advanced AI Workflows: You can go beyond Q&A to create multi-step “agentic” tasks that combine multiple connectors (e.g., retrieving a spreadsheet from ERP, processing it, and sending a summary email).

- Continuous Model Upgrades: Track the open-source LLM ecosystem to adopt new models that might offer better performance or domain specialization.

- Enhanced Security & Compliance: With everything in your environment, you can run penetration tests, code audits, or policy enforcement, further strengthening trust in AI adoption.

Conclusion

By combining vLLM for on-prem large language model inference, LiteLLM for unified gateway routing, MCP connectors for enterprise integration, LangFuse for tracing, and an open-source observability stack, you can build a robust, secure, and enterprise-ready AI platform entirely within your environment. Your employees will be able to use powerful AI-driven assistance for retrieving knowledge, automating tasks, and collaborating in real time, all without risking data leaks or compliance headaches.

This self-hosted approach offers the best of both worlds: the flexibility and innovation of open-source AI alongside the strict security and complete control that modern enterprises demand. Whether you’re in finance, healthcare, manufacturing, or any other sector with sensitive data, this architecture proves that leading-edge AI is possible without relying on external SaaS providers.

Ready to explore how AI can transform your organization? Consider piloting this architecture in a sandbox environment with a single LLM and a few MCP connectors. Once you see the productivity gains and peace of mind it offers, you’ll be well on your way to a more automated and intelligent enterprise, on your own terms.

Contact us to find out more or join one of our Croz AI Business Innovation Sprints. The next one is in Munich, and you can apply via the link.