Welcome back! Let’s continue our exploration of AI-powered search. If you landed here before reading the first part of this blog series, make sure to head here first for better understanding and some context.

AI augmented search

The rapid advancement of artificial intelligence over the last couple of years has enabled many fascinating and useful applications – one of which is the possibility of improving and simplifying the search experience. Let’s go over the most common ways artificial intelligence can be used to improve search, and see how they differ from traditional approaches.

Embedding search

Embedding search, a core component of semantic search, is a type of search technology that interprets the meaning of words and phrases. This enables it to return results based on meaning rather than just keyword matching.

For a simple example, consider the following. Let’s say a user wants to buy active noise cancellation (ANC) headphones but doesn’t know the exact name of the ANC technology.

With normal search, they’d struggle to find the right query. Without knowing the exact term, retrieving relevant results would be difficult. However, with embedding search, the user can write a query describing what they need, for example:

“headphones which strongly and automatically block out noise”

The embedding model, which captures word meanings, can retrieve ANC headphone products when given this query, recognizing that the concept the user is describing is very similar to ANC headphones.

Semantic search works through embeddings – numerical representations of words/phrases in a vector space. Using a special type of machine learning model called an embedding model, a numerical vector is generated for the given text. The embedding model encodes the meaning of words into its representations by placing similar words closer together in the vector space – the vector representation for “brown dog” will be closer in the vector space to “gray cat” than to “rocket thruster.”

Vector similarity is usually measured with a similarity or distance metric – a mathematical function that takes two vectors and outputs a single number indicating how close they are. The most common ones are Euclidean distance (also known as L2 distance), dot product and cosine similarity. Their ranges differ: cosine similarity always falls between -1 and 1, while Euclidean distance and dot product are unbounded.

Out of these, cosine similarity is probably the one you’re going to come across the most in text embedding applications, as it has a very useful property of scale invariance. This means it emphasizes the relative orientation of vectors, ignoring differences in their magnitude. In other words, even if the two texts being compared greatly differ in length, if most of their meaning is the same they will still be assigned a high similarity score.
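To make the difference between these metrics concrete, here is a minimal sketch in plain Python. The three vectors are made-up toy values, not real embeddings; `long_text` simply points in the same direction as `short_text` with ten times the magnitude.

```python
import math

def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def euclidean_distance(a, b):
    """Straight-line (L2) distance between two vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; ignores their magnitude."""
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))

# Toy vectors (assumed values for illustration only).
short_text = [1.0, 2.0, 3.0]
long_text = [10.0, 20.0, 30.0]   # same direction, 10x the magnitude
unrelated = [3.0, -1.0, 0.5]

print("cosine(short, long):     ", cosine_similarity(short_text, long_text))
print("cosine(short, unrelated):", cosine_similarity(short_text, unrelated))
print("euclidean(short, long):  ", euclidean_distance(short_text, long_text))
print("dot(short, long):        ", dot(short_text, long_text))
```

Cosine similarity treats `short_text` and `long_text` as practically identical because they point the same way, while Euclidean distance and dot product are both strongly affected by the difference in magnitude.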

Vector representations are high dimensional, often consisting of 768 or 1536 dimensions. Traditional databases aren’t designed to store and search vectors this large efficiently, which creates a need for an alternative database type – vector databases. These databases are designed to store and search high dimensional vectors efficiently. The speed is usually achieved by using specialized data structures and algorithms, which include:

- tree type structures (k-d trees, VP trees)

- proximity graphs (Hierarchical Navigable Small World graphs)

- vector compression (product quantization, ScaNN)

- and many more

Out of these approaches, Hierarchical Navigable Small World (HNSW) graphs are the one you’ll find in most vector database implementations, thanks to their high speed and excellent scaling.

These approaches to vector search fall under the domain of approximate nearest neighbour search (aNN) – approaches where the algorithm sacrifices some precision for massively increased speed of retrieval. This means that aNN algorithms do not guarantee that the N vectors they return are the absolute closest to the target vector; in practice, however, the returned vectors are typically still very similar to the target vector.

On the other hand, the approach where the returned vectors are in fact guaranteed to be the N closest ones is called exact k-nearest neighbour (kNN) search. Although it guarantees precise results, it is rarely used in real-time applications on large collections, because it has to compare the query against every stored vector. In practice and for most use cases, aNN algorithms’ precision is more than enough.
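The exact approach is easy to sketch as a brute-force scan, which also shows why it gets expensive: every query touches every stored vector. The index below is a made-up toy example with three-dimensional vectors, and `exact_knn` is a name chosen for illustration, not an API of any particular vector database.

```python
import heapq
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def exact_knn(query, vectors, k):
    """Return the k (id, score) pairs most similar to the query, best first.

    Scans every stored vector, so the cost grows linearly with the index size.
    """
    scored = ((cosine_similarity(query, vec), doc_id) for doc_id, vec in vectors.items())
    top = heapq.nlargest(k, scored)
    return [(doc_id, score) for score, doc_id in top]

# Toy index with invented 3-dimensional "embeddings" (illustration only).
index = {
    "brown dog": [0.9, 0.8, 0.1],
    "gray cat": [0.8, 0.9, 0.2],
    "rocket thruster": [0.1, 0.2, 0.95],
}
query = [0.85, 0.85, 0.1]

for doc_id, score in exact_knn(query, index, k=2):
    print(f"{doc_id}: {score:.3f}")
```

An aNN index such as HNSW avoids the full scan by only visiting a small part of the collection, which is where the speed-up (and the small loss of precision) comes from.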

Hybrid search

Based on the previous section, it would seem that embedding search is superior to traditional search in every aspect and answers all of our original quarrels with search. However, embedding search is not perfect and does have its drawbacks. To name only a few:

- Lack of precision for exact matches

Semantic search is excellent at finding contextually relevant data, but, due to the nature of embedding search, it might deprioritize exact matches or rank them lower in some cases. The ability to match a given query exactly is especially important in some domains, such as legal or medical.

- Poor performance with rare/out-of-vocabulary words

As embedding models used in semantic search are trained on large text corpora, they are very good at generalizing and capturing general meaning; however, they may struggle with rare or out-of-vocabulary words or perhaps new terminology introduced after the model was trained. One way of fixing this issue is fine-tuning the embedding model on a dataset similar to the target domain.

- Overgeneralization

Another issue that can arise with embedding search is overgeneralization – the search algorithm retrieves results that are loosely related but not directly relevant to the query. This happens because embedding models can sometimes over-generalize, returning documents that are conceptually close but might not address the user’s actual intent.

It seems that we have two imperfect search strategies at our disposal – traditional and semantic search, each coming with certain benefits and downsides. So why not just combine them?

This is exactly the idea behind hybrid search. Hybrid search combines traditional keyword-based retrieval (e.g., BM25) with semantic, metadata-based, or entity-based search techniques to improve relevance. Usually, a traditional search (e.g., BM25) and another type of search (e.g., semantic or entity-based) are run separately, and their results are combined by a merging algorithm, most often reciprocal rank fusion (RRF), to produce a unified result set. In a way, this is also a type of reranking algorithm.
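Reciprocal rank fusion itself is only a few lines long. The sketch below assumes each search returns an ordered list of document IDs and scores every document as the sum of 1 / (k + rank) over the lists it appears in. The constant k = 60 is a commonly used default, and the document IDs and rankings are invented for illustration.

```python
from collections import defaultdict

def reciprocal_rank_fusion(rankings, k=60):
    """Merge several ranked lists of document IDs into one list, best first.

    Each document scores 1 / (k + rank) for every list it appears in,
    with rank starting at 1. Documents ranked well by several searches win.
    """
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)

# Hypothetical results from a keyword search and a semantic search.
bm25_results = ["doc_a", "doc_b", "doc_c"]
semantic_results = ["doc_c", "doc_a", "doc_d"]

fused = reciprocal_rank_fusion([bm25_results, semantic_results])
print(fused)
```

Because RRF only looks at ranks, it doesn’t matter that BM25 scores and cosine similarities live on completely different scales, which is a large part of why it is such a popular merging strategy.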

Hybrid search is an excellent and often-used approach, as it combines the strengths of both traditional and semantic search in a simple manner.

Retrieval augmented generation

On November 30, 2022, a new machine learning model was released. Although the release was somewhat anticipated and welcomed by the enthusiasts in the machine learning world, it didn’t catch the public’s eye. Yet.

That was the day ChatGPT and its underlying model, GPT-3.5, launched—marking the moment the AI world as we knew it changed forever.

This model, known as a large language model (LLM), could engage in human-like conversation, answer questions, and generate text with an unprecedented level of coherence. It took only 5 days for the service to reach 1 million users, one of the fastest adoption rates of any consumer application at the time. Due to its ability to generalize and adapt to different tasks, it wasn’t long before people applied it to many different use cases, from writing emails and summarizing text, all the way to programming and AI agents. It seemed there was no limit to this new technology. Some even suggested that it was the first version of AGI and that the singularity was right around the corner.

However, the truth was a bit more grounded, and the first limitations of LLMs soon came to light. Hallucinations (instances where the LLM generated false or misleading information), along with a general lack of domain-specific knowledge, emerged as the most reported issues.

There are two main reasons behind these issues. First, these models generate text by predicting the most likely next word based on patterns learned from vast amounts of data. This means they can produce responses that sound convincing but are, at times, completely incorrect. Second, their knowledge is limited to the data they were trained on, which typically has a cutoff date, often months or years before deployment. As a result, LLMs may lack awareness of recent events, new research, or evolving industry trends. While they can generalize across many topics, they struggle with highly specialized or niche areas where training data is sparse or outdated. This makes their application to domain-specific tasks challenging.

There isn’t much end users can do to solve the first issue – this task is left to the AI scientists researching new architectures and algorithms that mitigate the issue. However, there is a way to mitigate the second issue – retrieval-augmented generation (RAG).

The idea behind RAG is simple – instead of relying solely on the model’s training data for information, RAG enhances its responses by retrieving relevant information from an external source, such as a database or a document repository, and then passing it to the LLM before generating an answer. A good way of thinking of a RAG system is like a librarian in a library. The LLM is the librarian, fetching relevant books before answering a question, using them to augment and fact-check their already vast knowledge.

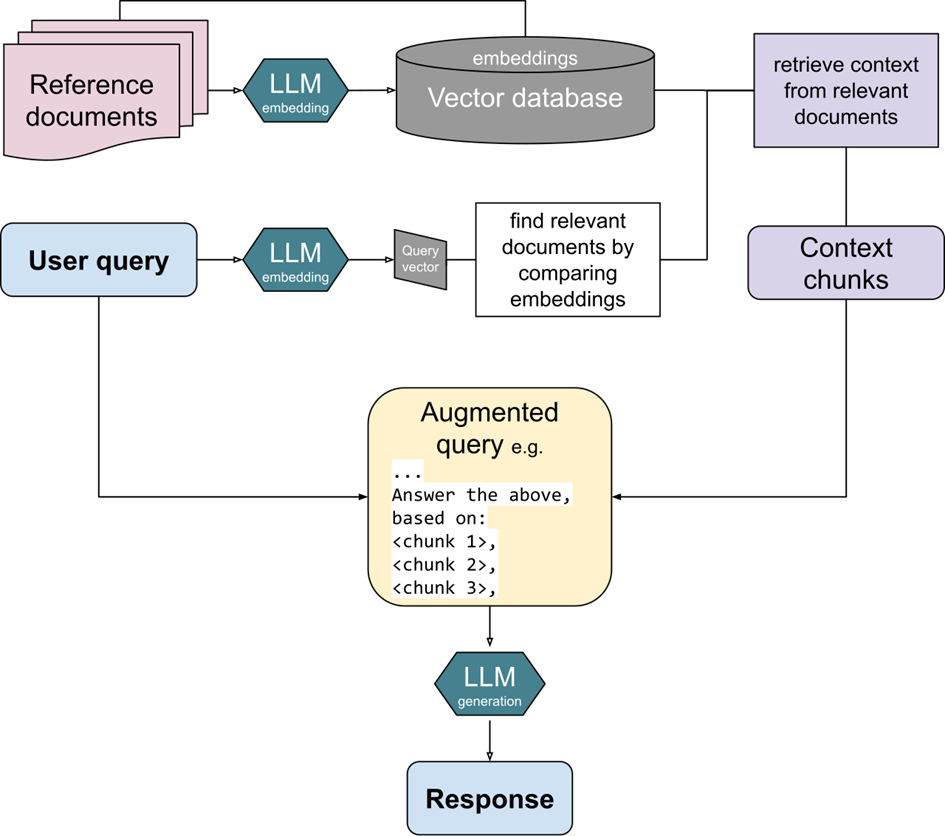

The RAG pipeline is illustrated in the following image:

There are three main components of a RAG system:

- reference documents

- vector database + embedding model

- LLM

Once the user types in their query, an embedding of the query is generated. Using embedding search, a list of relevant document chunks is fetched from the vector database. The retrieved chunks are appended to the original query, forming an augmented query that provides additional context. Finally, this augmented query is passed to the LLM as a prompt, enabling it to generate a response that is more accurate and based on relevant external information.
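The flow described above can be sketched end to end in a few functions. Everything here is a simplified stand-in: `toy_embed` is a bag-of-words counter used instead of a real embedding model, a plain Python list plays the role of the vector database, the sample chunks are invented, and the final LLM call is left out, so the sketch stops at the augmented prompt.

```python
import math
import re
from collections import Counter

def toy_embed(text):
    """Stand-in for a real embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine_similarity(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query, chunks, top_n=2):
    """Return the top_n chunks most similar to the query."""
    query_vec = toy_embed(query)
    ranked = sorted(chunks, key=lambda c: cosine_similarity(query_vec, toy_embed(c)), reverse=True)
    return ranked[:top_n]

def build_augmented_prompt(query, retrieved):
    """Combine the retrieved chunks and the original query into one prompt."""
    context = "\n".join(f"- {chunk}" for chunk in retrieved)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )

# Invented reference chunks, standing in for a document database.
chunks = [
    "Our return policy allows returns within 30 days of purchase.",
    "Active noise cancellation headphones are listed under Audio.",
    "Standard shipping within the EU takes about one week.",
]
query = "How many days do I have to return a purchase?"

prompt = build_augmented_prompt(query, retrieve(query, chunks, top_n=1))
print(prompt)  # this string is what would be sent to the LLM
```

In a real system, you would swap `toy_embed` for an embedding model, the list scan for a vector database query, and pass `prompt` to the LLM of your choice.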

As an additional bonus, RAG effectively enables the user to query the document database and “talk” with the data – instead of just being presented with the queried information as they would with traditional search, RAG enables the user to ask the LLM for clarification about the retrieved data, additional information or even documents similar to one found and used as reference.

To sum up, retrieval-augmented generation is a simple and cost-effective way to augment the answers of an LLM with your domain-specific data. It is faster, simpler and less expensive than fine-tuning the model on new data, in addition to being more modular – when new data is added to the database, the system can immediately take advantage of it without requiring any retraining.

Image search

So far, we’ve looked at different ways to organize and search textual documents and how machine learning can help retrieve useful information. But we’ve only focused on one type of media—text. While text is what most people associate with search, a huge amount of today’s information is in image form. Being able to index and search images not just by their metadata but by their actual content is becoming increasingly important.

Consider the following scenario: you are a proud owner of a web shop selling various unique clothing items. You followed all the best practices outlined previously in this blog, and now your webstore supports normal embedding and hybrid search of the names and descriptions of all your items, allowing your customers a seamless search experience. You even have a chatbot backed by retrieval-augmented generation, enabling users to explain their preferred style and have the chatbot suggest items that might best fit.

However, despite positive feedback for your chatbot, you encounter one suggestion: The user is attempting to find a specific blue-and-white dress with a distinct pattern and stitching, or at least something as similar as possible. They have a picture of it – their friend has it; they’re sure it was purchased from your store, but couldn’t find it based on their description. If only they could upload the photo to your chatbot—they’re convinced it would help them find the dress instantly. After all, a picture is worth a thousand words.

Now consider a different scenario: A medical database enhanced with RAG where physicians can quickly describe a patient’s symptoms and verify their diagnoses using reliable sources such as research papers and case reports. An excellent addition to this system would be allowing doctors to upload X-rays, MRIs or lab culture images—key elements in making accurate diagnoses.

Image search addresses these challenges by enabling retrieval based on visual similarity rather than textual descriptions. Image search is structurally similar to text-based embedding search, but image embeddings capture visual features (e.g., texture, color, shape) rather than the meaning of text. It consists of two components: an image embedding model and a vector database used to store embeddings. The embedding model is typically some variant of a convolutional neural network (CNN) or vision transformer (ViT).

The embedding model converts images into embeddings capturing essential visual attributes such as shapes, textures, and colors, and even higher-level concepts such as people or objects.

The resulting embeddings ensure that two images with similar features or showing similar objects are going to be closer together in the resulting vector space than two dissimilar or unrelated images. The embeddings are created for the entire document base and stored in a vector database, which we already covered in the embedding search section. When a user uploads an image, it undergoes the same transformation, and the system retrieves the closest matches based on cosine similarity or other metrics, such as Euclidean distance or dot product.
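The same embed-then-compare loop can be sketched for images. To keep the example self-contained, a coarse color histogram stands in for the CNN or ViT embedding model, which means it only captures color and none of the shapes or higher-level concepts a real model would. Images are represented as plain lists of RGB tuples, and the catalogue is invented for illustration.

```python
import math

def color_histogram_embedding(pixels, bins_per_channel=2):
    """Toy image embedding: the fraction of pixels in each coarse RGB bin."""
    step = 256 // bins_per_channel
    hist = [0.0] * (bins_per_channel ** 3)
    for r, g, b in pixels:
        idx = (r // step) * bins_per_channel ** 2 + (g // step) * bins_per_channel + (b // step)
        hist[idx] += 1.0
    return [count / len(pixels) for count in hist]

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

# Invented catalogue: each "image" is just a short list of RGB pixels.
catalogue = {
    "blue-and-white dress": [(20, 40, 220)] * 6 + [(250, 250, 250)] * 4,
    "red jacket": [(220, 30, 30)] * 9 + [(10, 10, 10)] * 1,
    "white shirt": [(245, 245, 245)] * 10,
}
# Embeddings are computed once for the whole catalogue and stored.
catalogue_embeddings = {name: color_histogram_embedding(px) for name, px in catalogue.items()}

# The uploaded photo goes through the same transformation at query time.
uploaded_photo = [(30, 50, 200)] * 5 + [(240, 240, 240)] * 5
query_embedding = color_histogram_embedding(uploaded_photo)

best_match = max(
    catalogue_embeddings,
    key=lambda name: cosine_similarity(query_embedding, catalogue_embeddings[name]),
)
print(best_match)
```

Replacing `color_histogram_embedding` with a proper image embedding model, and the dictionary with a vector database, gives the structure of a real image search system.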

Applied to our webshop scenario, this means customers could take a photo of an item they like, which would be encoded into an embedding and compared against the product catalogue in real-time, finding the most visually similar items. In the medical scenario, physicians could upload an X-ray or MRI, and the system would fetch cases with similar (pathological) features, assisting in diagnoses.

An additional advantage of modern multimodal models, such as OpenAI’s CLIP, Google’s ALIGN or an open source solution like OpenCLIP, is the possibility of cross-modal retrieval – meaning users can search for images using textual queries. For example, the user could type “blue floral summer dress” and the system would retrieve the most relevant images, even if those words don’t explicitly appear in product descriptions. Similarly, a radiologist could enter “lung nodule with irregular margins,” and the system could return matching scans.

Next steps

In this chapter we examined the different ways machine learning and artificial intelligence can be used to enhance your search experience, giving us the theoretical foundation we need for the next and final part of this blog series – creating a demo search application showcasing everything we’ve learned so far.