Databricks

In the rapidly evolving landscape of big data processing, cloud-based platforms have emerged as the go-to solution for handling vast amounts of data efficiently and economically. Among these, Databricks on Amazon Web Services (AWS) stands out as a powerful and versatile platform that is designed to help organizations process, analyse, and derive valuable insights from massive volumes of data. Developed by the team that originally created Apache Spark, Databricks provides an optimized and streamlined environment for big data analytics and machine learning tasks. By leveraging the AWS infrastructure, Databricks can handle diverse workloads, enabling data scientists, analysts, and engineers to collaborate efficiently on data projects in real-time.

In this blog post, we will present a performance comparison between a Databricks Jobs Compute Cluster and Jobs Compute Photon Cluster. We conducted a series of tests using different workloads on both clusters to evaluate their efficiency. The goal was to identify any potential improvements and to determine how much performance is gained and if it’s worth the price difference. By understanding the fundamental differences and exploring their respective strengths and weaknesses, organizations can make informed decisions regarding their big data processing strategies.

What is Photon?

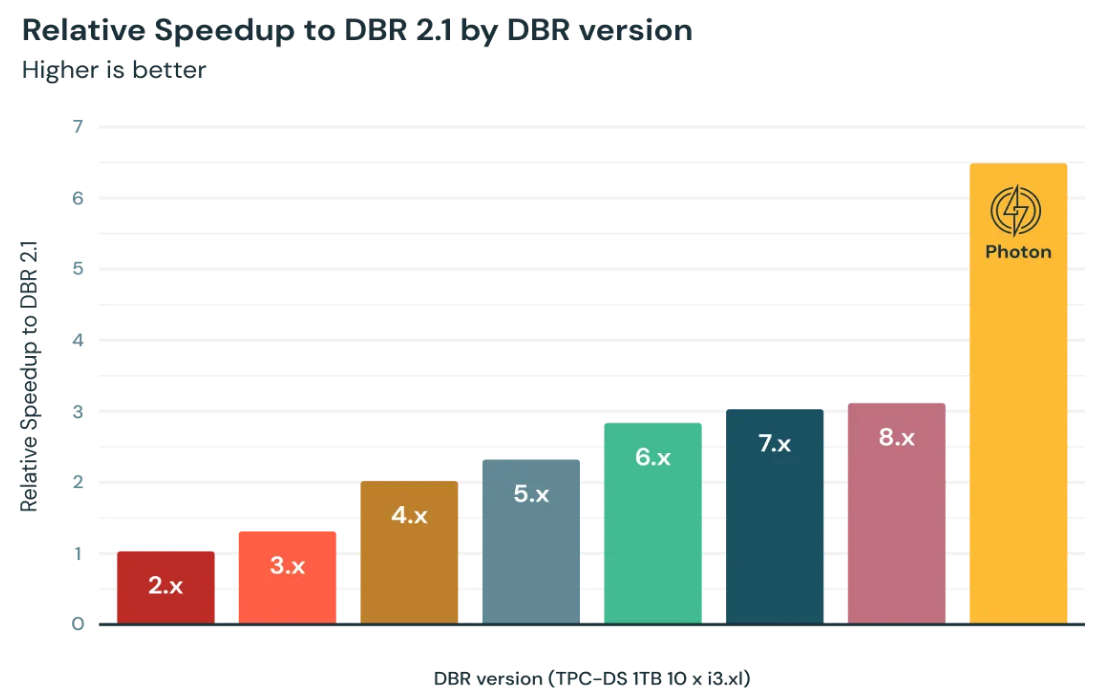

According to Databricks, “Photon is the next-generation native vectorized engine developed in C++ to dramatically improve query performance”. In essence, Databricks has rewritten certain parts of the Spark engine in C++, and it can replace the native Spark query engine for a subset of queries. The main goal of using Photon is to harness the full potential of modern hardware while seamlessly integrating with your existing Apache Spark APIs.

To take advantage of Photon, ensure your cluster is running Databricks Runtime 9.1 LTS or above. After that, adopting Photon is a breeze – simply upgrade your cluster’s Databricks runtime to a Photon runtime and you’re ready to go. But despite its ease of adoption, Photon represents a substantial evolution.

Photon is optimized for accelerating queries that involve large volumes of data, typically exceeding 100GB, and include complex aggregations and joins. It also truly shines when data is accessed repeatedly from the disk cache, resulting in faster performance and reduced latencies.

Additionally, Photon provides more robust scan performance for tables with numerous columns and many small files, making it a versatile solution for various data scenarios.

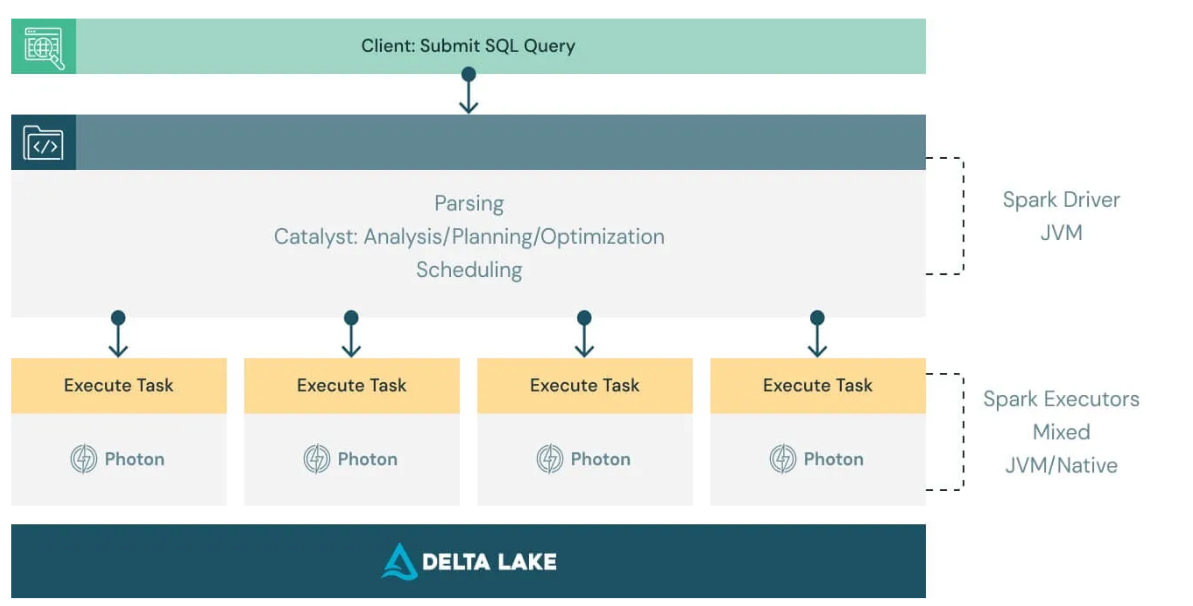

Below is a “lifecycle of a query” so we can better understand how and where Photon comes into play.

Source: Databricks

When interacting with the Spark driver, the submitted queries or commands undergo parsing and the Catalyst optimizer does the analysis, planning and optimization, following the usual process as if there was no Photon involved in the process.

However, Photon introduces a notable distinction in the runtime process. After the Catalyst optimization, the runtime engine takes over, and it examines the physical execution plan to identify which parts can be processed using Photon. Some minor adjustments may be applied to the plan, like transforming a sort merge join into a hash join. Despite these modifications, the overall structure of the plan, including the join order, remains unchanged. It’s worth noting that Photon does not currently support all the features available in Spark, which can lead to queries executing partially in Photon and partially in Spark. Nevertheless, this hybrid execution model remains entirely transparent to the end user.

Once the query plan is prepared, it is divided into atomic units known as tasks, which are then distributed for execution across worker nodes. These tasks operate on specific data partitions and run in threads. At this stage, the Photon engine comes into play. It essentially replaces Spark’s whole stage codegen with a native engine implementation, ensuring efficient and optimized execution.

And while Photon excels in numerous scenarios, it is essential to understand its limitations to make the most of this powerful engine. Some of the most important limitations are:

- Query Duration: Photon is not expected to significantly improve short-running queries (queries taking less than 2 seconds) involving small amounts of data.

- Non-Photon Features: Features not supported by Photon will run the same way they would without using Photon, without any additional performance advantage.

Spark application

To compare the different engines, we developed a Spark application that generates a dataset that is later used for training a fraud detection model.

We used the raw data on credit card transactions (2.19 GB) available here. These transactions cover the timeframe spanning from 1991 to 2020.

A Spark app uses the following scripts:

1. preprocess.py

- as a module, contains preprocessing methods converting raw transactions into a more usable format (converting columns to correct datatypes, forming new columns, etc.)

- as a standalone script, takes a dataset as an argument, pre-processes it and saves it

2. profiles.py

- module offering a method to calculate aggregations for a user/merchant in the last year on a monthly basis

3. generate_dataset.py

- main script computing a feature dataset from a raw, pre-processed dataset

- features include long and short-term aggregations, user profiles, and feature ratios

Cluster configurations

Both clusters were running the same number of workers during testing. We experimented with employing 6 and 9 workers, but the discrepancy between clusters remained consistent. Put simply, whenever we conducted tests with 9 workers instead of 6, both clusters exhibited approximately 33% improved outcomes. Therefore, for comparison purposes, we will only consider the distinctions between clusters when both employ 6 workers.

During cluster configuration, we opted for the m4.xlarge Worker type for the non-Photon Jobs Compute cluster and m5d.xlarge for the Jobs Compute Photon cluster. Both worker types come with 16 GB Memory and 4 cores each. Hence, employing 6 workers of this kind results in a total memory of 96 GB and 24 cores in total.

Cluster comparison

To test how using Photon would affect our previously described app, we ran a series of tests on the full dataset, as well as the reduced dataset with the more recent data (using only the transactions after 2010).

As we said earlier, Photon offers faster performance when data is accessed repeatedly from the disk cache, so we decided to also compare the difference between the two clusters when using cache utilization.

Across all the test cases, the Photon cluster consistently outperforms the non-Photon Jobs Compute cluster showing an average improvement of a little over 21% on the full and reduced datasets.

Where we really start to see the big difference is when we start using some of the previously mentioned Photon advantages. In our case, we used caching on both clusters as both support caching; however, Photon has been specifically designed to capitalize on caching’s benefits, demonstrating a clear advantage over the non-Photon Jobs Compute cluster in scenarios where caching is employed.

With caching enabled, the Jobs Compute cluster demonstrated some serious improvement in performance, as repetitive data access was reduced. However, the real advantage of caching became apparent when we analysed the Photon cluster’s results. Using Photon showed an impressive average improvement of around 65% in comparison to the non-Photon Jobs Compute cluster.

We experimented with cache utilization and explored cache-accelerated worker instance types, which come pre-configured for optimal disk cache performance. The recommended (and easiest) way to use disk caching is to choose a worker type with SSD volumes when you configure your cluster. These cluster types offer a good balance between a simple Jobs Compute cluster and a Jobs Compute Photon cluster. We observed a significant 25% performance improvement with cache-accelerated workers compared to the standard cluster, all while being more budget-friendly than the Photon option. This improvement in performance also comes at a relatively modest price increase. Therefore, if absolute peak performance is not a strict requirement, considering cache-accelerated workers could be a viable alternative to Photon. They consume fewer Databricks Units per hour (DBU/h) while still providing a performance boost.

Conclusion

In this performance comparison between the Databricks Job Compute Cluster and the Photon Cluster, we have seen compelling evidence of the Photon Cluster’s superiority in terms of data processing speed and efficiency. However, it is essential to consider the cost implications when choosing a cluster as Photon instance types consume DBUs at a different rate than the same instance type running the non-Photon runtime.

In our case, Photon originally offered about 21% improvement, but it used 13.8 Databricks Units per hour compared to the 7.5 Databricks Units per hour used by the non-Photon Jobs Compute cluster. That resulted in running costs being about 84% higher while offering only 21% improvement. Using Photon became worth it only after enabling cache utilization in our Spark application. Therefore, when choosing between the Databricks Job Compute Cluster and the Photon Cluster, organizations should carefully assess their specific needs, workloads, and budget constraints. For data-intensive tasks that require optimal performance and the use of advanced features, the Photon Cluster is undoubtedly a superior option. On the other hand, for more general data processing tasks that do not demand high-performance optimizations, the Databricks Job Compute Cluster can provide a cost-efficient solution without compromising on essential functionalities.

Jobs Compute Photon Cluster is the ideal choice when:

- Dealing with large datasets that require complex aggregations and joins.

- Frequently processing data that can be cached for subsequent queries.

- Time-sensitive analytics where faster results are crucial, and budget permits the higher DBU consumption.

Jobs Compute Cluster is preferred when:

- Dealing with smaller datasets and relatively simple processing tasks.

- Budget constraints are a significant consideration, and the trade-off between performance and cost is crucial.

- Short-running queries where Photon’s acceleration may not provide significant benefits.

In summary, the decision to opt for the Photon Cluster or the Databricks Job Compute Cluster should be driven by the desired balance between cost and performance. For mission-critical tasks where performance is paramount, investing in the Photon Cluster will yield substantial benefits. For other scenarios, the Databricks Job Compute Cluster can deliver satisfactory results while staying within budget constraints. A thoughtful evaluation of these factors will enable organizations to make an informed decision that aligns with their specific requirements and priorities.

If you would like us to help you on your Databricks journey, don’t hesitate to contact us at [email protected].

Also, check out our Spark courses offering, if you’d like to build your Spark skills