Howdy AI friends,

Welcome back from the summer sizzle! Just like shedding those extra pounds after a beach vacation, diving back into the world of AI can feel daunting. But fear not, this isn’t a weight loss edition – we’ve got a hearty topic on the menu: AI platforms.

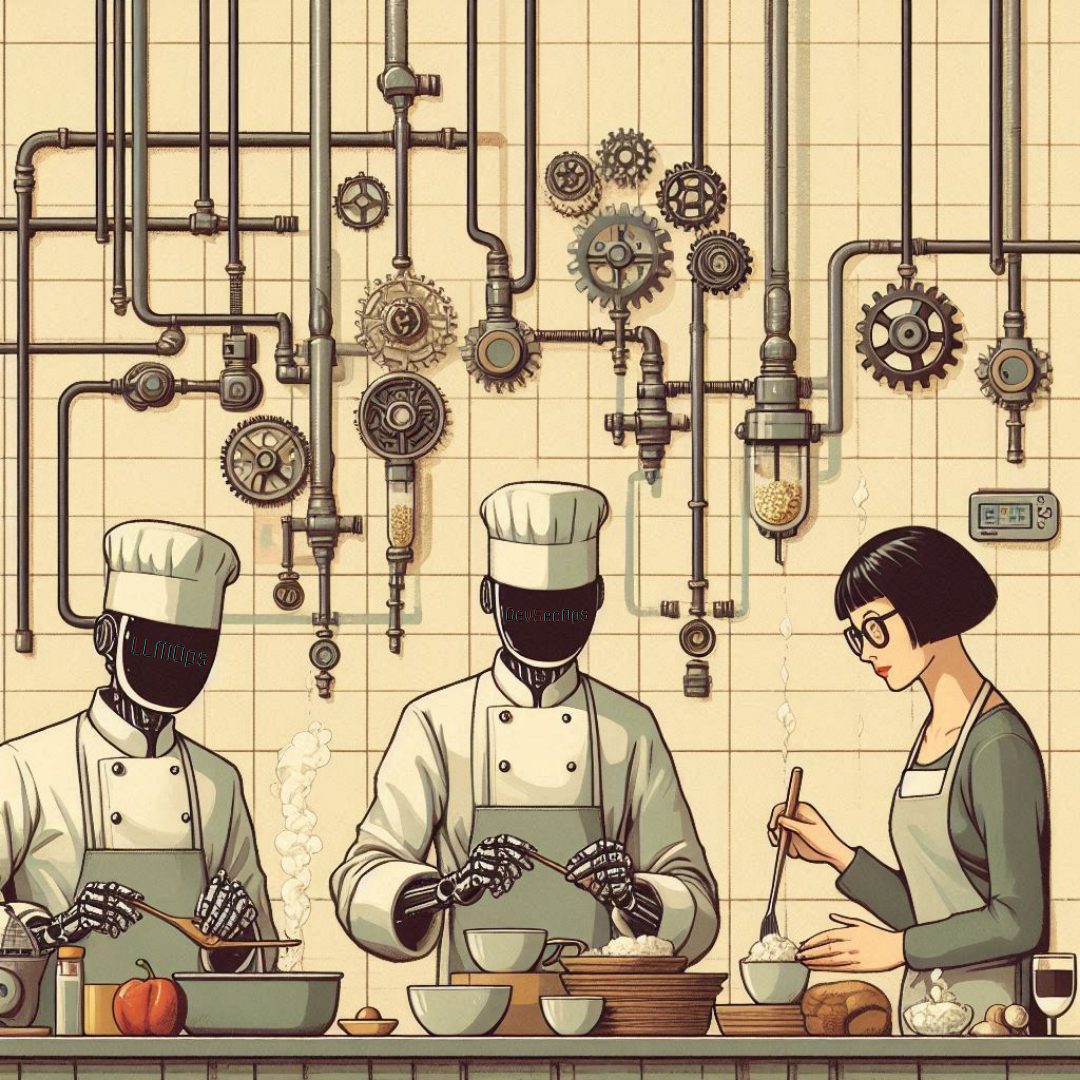

Think about what an AI platform is. My imagination goes to mastering French cuisine: it’s a cornerstone of the culinary arts, a must-know for any aspiring chef. Similarly, AI platforms are the backbone of any AI endeavor. They’re complex but essential.

I’ve been mulling over the best AI platforms lately and was reminded of an insightful LinkedIn discussion whose authors nailed it: they emphasized the importance of a solid platform strategy rooted in proactive engineering.

It all made me think: what’s behind the curtain?

Let’s break down the key socio-technical ingredients: DevOps for a smooth-running AI kitchen, Kubernetes as the scalable infrastructure, and the human element – people and stakeholders with the right skills and mindset.

AI Platforms – The Holy Ops – call it DevSecOps, MLOps or LLMOps

DevOps practices are not just integral; they are the heart of AI platforms. They facilitate the continuous integration and delivery of machine learning models, automating repetitive tasks and reducing the time it takes to get a model from development to production.

With the recent explosion of Large Language Models (LLMs), we’ve got a new sous-chef on the block: LLMOps. It’s specifically designed to handle the unique needs of LLMs, making sure they’re prepared and ready to serve up impressive results. LLMOps tools integrate with your existing CI/CD pipelines and work in all kinds of kitchen environments, from cloud-based to on-premises. I like this video from Neptune.ai because it makes a good point about how close DevOps and LLMOps are. In my opinion, LLMOps can be implemented using DevOps best practices. I believe this would ensure consistency and alignment in the process, for example, between the data scientists fine-tuning LLMs and the engineers involved in testing.

Kubernetes: because tasty biscuits have good containers!

Imagine Kubernetes as the airtight container that keeps your biscuits fresh and delicious. In the AI world, it’s the orchestration layer that keeps your machine-learning models running smoothly. Kubernetes manages the deployment, scaling, and operation of containerized applications, ensuring your AI infrastructure is always up to snuff.
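To make the "scaling" part a little more concrete, here is a minimal sketch of the replica calculation described in the Kubernetes Horizontal Pod Autoscaler documentation: the desired replica count is the current count multiplied by the ratio of the observed metric to the target metric, rounded up. The function name, the example numbers, and the idea of applying it to a model-serving deployment are my own illustration, not something taken from a specific platform; the 0.1 tolerance mirrors the documented default, and real clusters add further safeguards (stabilization windows, min/max bounds) that are left out here.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     tolerance: float = 0.1) -> int:
    """Sketch of the HPA scaling rule (hypothetical helper, not a Kubernetes API).

    desired = ceil(current_replicas * current_metric / target_metric)
    If the ratio is within `tolerance` of 1.0, the replica count is left alone.
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: avoid churn and keep the current count.
        return current_replicas
    return math.ceil(current_replicas * ratio)


# Illustrative numbers: 4 model-serving pods averaging 90% CPU, target 60%.
print(desired_replicas(4, 90, 60))
```

With these made-up numbers, the kitchen is running hot, so the rule asks for more pods; if the load drops well below the target, the same rule scales the deployment back down.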

Filip Stetic’s technical blog provides an excellent recipe for setting up Kubeflow, a popular tool for deploying ML workflows. The post explains very clearly how Kubeflow helps AI platforms allocate computing resources efficiently so your models can be trained, tested, and deployed without any fuss. I also loved this blog about how Kubeflow integrates with MLOps because it is a hands-on tutorial that walks the reader through the main building blocks of a Kubernetes deployment.

Set your AI platforms free

Azure, SageMaker, Vertex AI, and Databricks offer a complete package for managing AI projects. These AI platforms provide a range of tools and services for building, training, and deploying machine learning models. Think of these platforms as all-in-one kitchens. They’re designed to help organizations scale up their AI operations, especially in cloud-native environments.

But what if you prefer a more customizable approach? A self-hosted, multi-cloud environment gives you greater control over your AI infrastructure. In this blog, I discuss how Red Hat OpenShift AI stands out in this area. Its hybrid multi-cloud flexibility and ability to handle large workloads with Kubernetes are particularly impressive, offering a high degree of customization and control.

If you want a full dive into Red Hat OpenShift and its open-source approach to AI platform management, you should watch this video by Mate Bogovic, Dino Simicev, and Francisco Javier Lopez Grüber.

Adding a sprinkle of human touch to the AI platform

AI platforms for business are not just about the technical components; they are also about building a collaborative team culture. As discussed in depth at QED 2024 and on the 0800-DEVOPS podcast with Patrick Debois, dedicated teams with specific knowledge and skills are crucial for success.

I was particularly impressed by Sasha Schärich’s talk on this topic. His holistic approach emphasizes the importance of building AI systems that are not only technically sound but also aligned with broader organizational objectives.

And there you have it, folks! A hearty helping of AI platforms served with a side of DevOps, Kubernetes, and a dash of human collaboration. But remember, even the best chef needs new recipes to keep things interesting. Next time, we’ll dive into the world of RAG (Retrieval-Augmented Generation) and model distillation. Imagine experimenting with new ingredients and techniques to create even more delicious AI dishes.

Stay tuned!